Dear Readers,

How much creative freedom can a model offer before it really threatens the big players? Wan 2.2 is an open-source video model that not only impresses with its technology, but also makes a cultural statement: transparency instead of a black box, control instead of dependence. In a world where more and more systems are becoming closed, controlled, and commercialized, Wan 2.2 sends a clear signal for openness – while delivering results that can compete with the big players.

In this issue, we take a closer look at the architecture and significance of Wan 2.2 – and show why it is more than just another model. Also: Amazon is improving its global robot fleet with generative AI, MIT is teaching robots to learn with just one camera, and we analyze what the latest leaks about GPT-5 and Gemini 3.0 Flash really mean. As always, you'll find an exciting quote, the “Graph of the Day,” and the question for you: Are you ready for the next generation of AI?

In Today’s Issue:

A new open-source model is here to take on the giants of AI video generation

Synthesia's new AI avatars don't just talk—they move, gesture, and engage

This AI agent is so good at Excel it's already beating Goldman Sachs analysts

Amazon's one-million-robot army just got a new generative AI brain

And more AI goodness…

All the best,

Wan 2.2 Open-Source AI Video Model Released!

The Takeaway

👉 Combination of two specialized networks improves video quality with the same computing power

👉 Full control via LoRA and ComfyUI – ideal for individual projects

👉 Commercial use without restrictions thanks to Apache 2.0

👉 No black box: model architecture and training methods are openly accessible

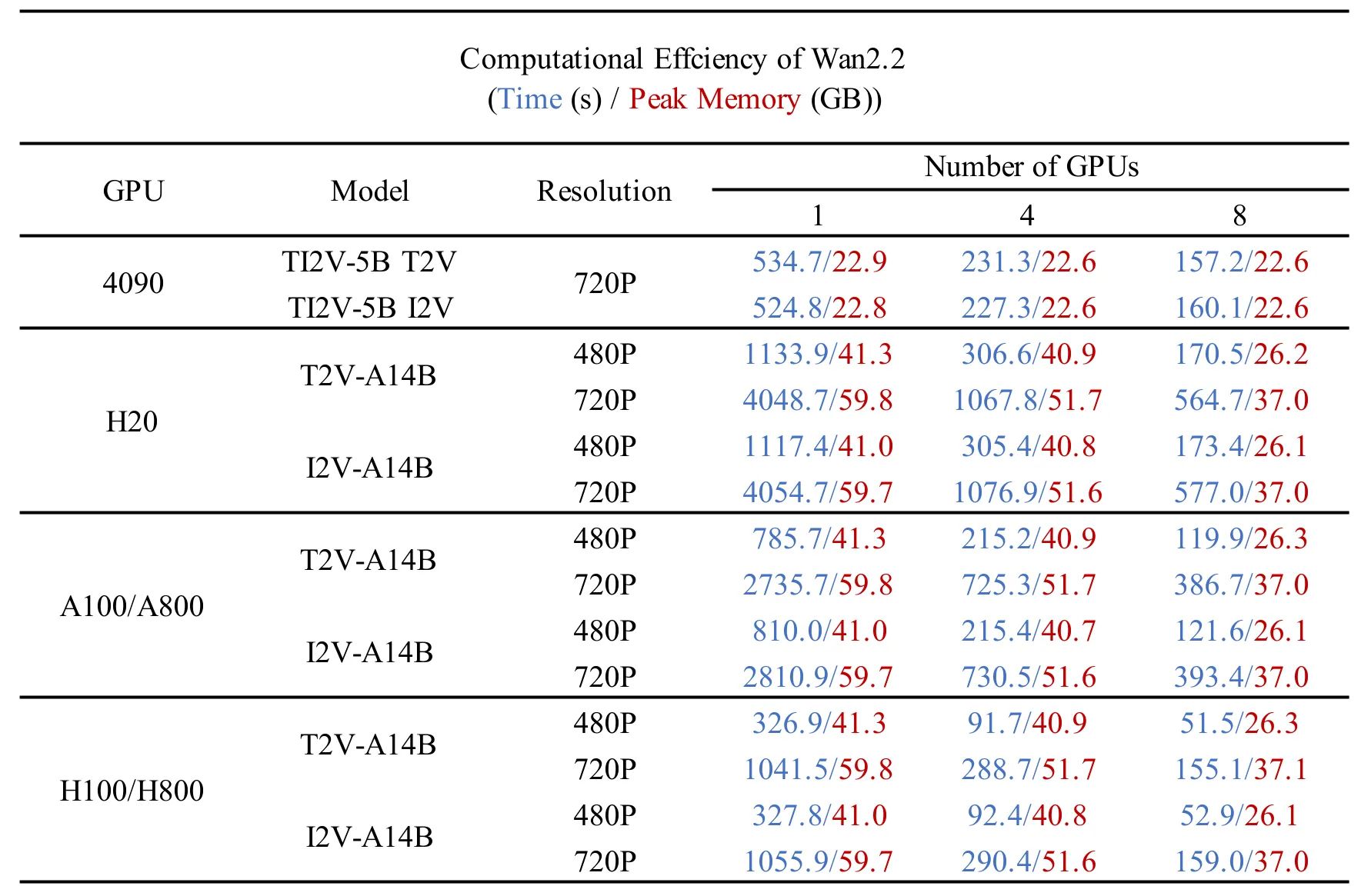

Wan 2.2 is the latest open-source video model that is turning heads in the AI community. It relies on a “mixture of experts” architecture in which two specialized networks work together: one for motion and structure, the other for fine image details. The result is significantly more realistic, fluid, and detailed video sequences at the same computing cost. Generated videos reach up to 1080p resolution at 24 FPS and can be produced directly via ComfyUI, Hugging Face, or Diffusers.
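For readers who want to picture the two-network idea, here is a minimal toy sketch. It is not the Wan 2.2 code. It assumes the two experts are selected by how noisy the current denoising step is, with a hypothetical switch point `boundary`, and it uses trivial stand-in functions (`coarse_expert`, `fine_expert`) where the real model would run large neural networks. All names here are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Latent = List[float]
Expert = Callable[[Latent, float], Latent]


@dataclass
class TwoExpertDenoiser:
    """Toy router: exactly one expert runs per denoising step."""
    high_noise_expert: Expert   # stand-in for "motion and structure"
    low_noise_expert: Expert    # stand-in for "fine image details"
    boundary: float = 0.5       # hypothetical switch point (assumption)

    def pick(self, noise_level: float) -> str:
        # Noisy early steps go to the coarse expert, late steps to the fine one.
        return "high" if noise_level >= self.boundary else "low"

    def step(self, latent: Latent, noise_level: float) -> Latent:
        if self.pick(noise_level) == "high":
            return self.high_noise_expert(latent, noise_level)
        return self.low_noise_expert(latent, noise_level)


def coarse_expert(latent: Latent, noise_level: float) -> Latent:
    # Placeholder for a large correction that fixes the overall layout.
    return [0.5 * v for v in latent]


def fine_expert(latent: Latent, noise_level: float) -> Latent:
    # Placeholder for a small correction that refines detail.
    return [0.9 * v for v in latent]


def run_schedule(denoiser: TwoExpertDenoiser, latent: Latent,
                 noise_levels: List[float]) -> Tuple[Latent, List[str]]:
    used = []
    for level in noise_levels:
        used.append(denoiser.pick(level))
        latent = denoiser.step(latent, level)
    return latent, used


denoiser = TwoExpertDenoiser(coarse_expert, fine_expert)
final_latent, experts_used = run_schedule(
    denoiser, [8.0, -4.0], [1.0, 0.75, 0.5, 0.25, 0.0]
)
print(experts_used)
```

Because only one expert runs at each step, the cost per step stays that of a single network even though the model as a whole holds two.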

Wan 2.2 performs exceptionally well in benchmarks and can compete with top models such as Kling 2.0. It does seem a little strange, though, that the benchmarks do not include a comparison with Veo-3, arguably the best AI video model in the world. For developers, creatives, and small studios, however, the release means more control, faster iteration, and true independence, including commercial use thanks to the Apache 2.0 license. Support for LoRA customization, which enables fine-grained control of the visual style, is also particularly valuable.

Its open architecture makes Wan 2.2 not only a technical alternative, but also a cultural one: away from the black box principle and toward collaborative, accessible creativity.

Why it matters: Wan 2.2 enables high-quality AI video production without dependence on corporations.

The model exemplifies the growing relevance of open systems in an increasingly closed AI ecosystem.

In The News

Synthesia Unveils Expressive Full-Body Avatars

Synthesia has announced Express-2, a new generation of full-body AI avatars that automatically understand your script to deliver it with natural body language, expressive voices, and perfect lip-syncing.

Claude Pro Gets New Limits

Beginning in late August, Anthropic will introduce new weekly rate limits for its Claude Pro and Max plans, a change the company estimates will affect less than 5% of its subscribers based on current usage.

AI 'Shortcut' Outperforms Top Analysts

A new "superhuman" Excel agent, Shortcut, is now live. It beats junior analysts from top firms head-to-head nearly 90% of the time, even when the humans are given a 10x time advantage.

Graph of the Day

Average LMArena appearances, overall and specifically in 2025:

OpenAI: Overall: 2.2; in 2025: 1.7

Google: Overall: 1.7; in 2025: 2.3

Anthropic: Overall: 0.6; in 2025: 0.0

xAI: Overall: 0.4; in 2025: 1.0

DeepSeek: Overall: 0.0; in 2025: 0.1

Meta: Overall: 0.0; in 2025: 0.0

Amazon improves robotics fleets with generative AI

Amazon has deployed DeepFleet, a new generative AI foundation model that improves the travel efficiency of its fleet of more than one million robots worldwide by approximately 10%. The model coordinates fleet movements and optimizes routes, reducing delivery times and costs.

Innovation: Fleet coordination via generative AI instead of conventional control algorithms.

Relevance & future: A blueprint for autonomous logistics networks and scalable robotics infrastructure.

MIT enables robots to learn on their own with just a camera

MIT researchers have developed an AI system that lets robots learn to control their own bodies solely by visually observing their movements, without the need for special sensors or prior training. Points visible on the body are linked to joint movements (a visuomotor Jacobian field approach), enabling robots to develop body awareness and perform precise actions.
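To make the Jacobian idea concrete, here is a minimal sketch under strong simplifying assumptions: one visible point moving in 2D, two actuators, and a hard-coded Jacobian with made-up values. In the MIT system the mapping is learned from camera footage; nothing below is their code, and the names `predict_motion` and `solve_command` are hypothetical.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Matrix2 = Tuple[Vec2, Vec2]


def predict_motion(jacobian: Matrix2, command_change: Vec2) -> Vec2:
    """Forward use: how far the visible point moves for a small command change."""
    (a, b), (c, d) = jacobian
    du0, du1 = command_change
    return (a * du0 + b * du1, c * du0 + d * du1)


def solve_command(jacobian: Matrix2, desired_motion: Vec2) -> Vec2:
    """Inverse use: which command change produces the desired point motion."""
    (a, b), (c, d) = jacobian
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("Jacobian is singular; the motion cannot be solved uniquely")
    dx0, dx1 = desired_motion
    return ((d * dx0 - b * dx1) / det, (-c * dx0 + a * dx1) / det)


# Made-up Jacobian for one point on the robot body (illustrative values only).
J: Matrix2 = ((2.0, 0.0), (1.0, 4.0))

motion = predict_motion(J, (0.5, 0.25))
command = solve_command(J, motion)
print(motion, command)
```

The same matrix serves both directions: once the robot knows how each visible point responds to its actuators, it can invert that relationship to choose commands that move a point where it wants.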

Unitree will sell its latest model, the R1 humanoid, for less than $6,000. This is an incredible price and shows just how much potential there is in humanoid robotics.

Share Your AI & Robotics Innovation with 200,000+ Readers

Are you building the future of robotics powered by AI? We’re featuring projects at the intersection of artificial intelligence and robotics in Superintelligence, the leading AI newsletter with 200k+ readers. If you have an exciting robotics product to present or a significant breakthrough to share, please show it to us.

Submit your research or a summary to [email protected] with the subject line “Robotics Submission”. If selected, we’ll contact you about a possible feature.

Rumours, Leaks, & Dustups

The ChatGPT UI has been redesigned. This looks like early preparation for GPT-5.

Gemini 3.0 Flash has been spotted. Early speculation is that it will be released at the same time as GPT-5 or shortly after.

Question of the Day

Are you excited for GPT-5?
