In Today’s Issue:

🏛️ The new HY 3D Studio 1.2 enters public beta

💸 Analysts warn OpenAI could exhaust its reserves within 18 months

📈 RAM costs have surged about 344% since September 2025

🔄 Mira Murati’s $12B startup loses three co-founders

🤖 Sam Altman secures 750MW of ultra-low-latency compute

✨ And more AI goodness…

Dear Readers,

Two billion dollars couldn't keep them. That's the brutal reality hitting Mira Murati's Thinking Machines Lab, where co-founders and key researchers keep walking out the door—often straight back to OpenAI.

Today's issue digs into the AI talent wars that prove money can't buy loyalty, while also unpacking a financial analyst's warning that OpenAI itself might run out of cash within 18 months. Meanwhile, DDR5 prices have jumped roughly 344% since September, Tencent just opened its powerful 3D studio to the public, and deepfakes are finally pushing governments from hand-wringing to real enforcement.

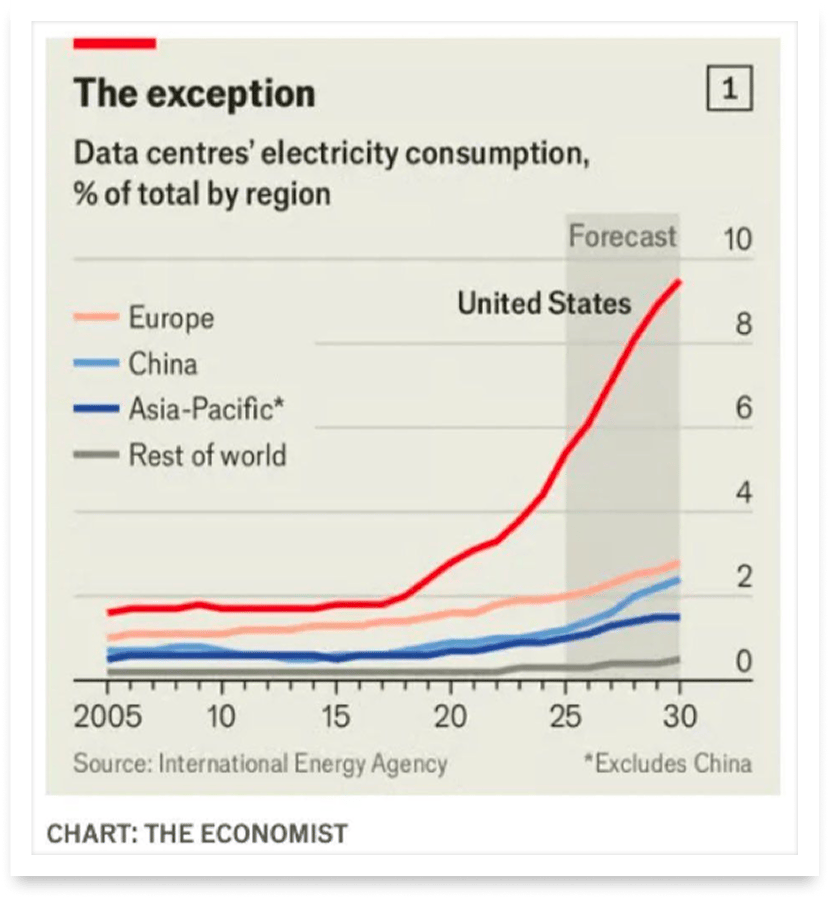

Add in data centers projected to consume nearly 10% of the US power grid by 2030, and you've got a week that reveals just how chaotic—and consequential—this AI moment really is. Let's dive in.

All the best,

🚀 Tencent Opens Powerful 3D Studio

Tencent has launched Tencent HY 3D Studio 1.2 in public beta, unlocking sculpt-level 3D asset generation with no application required. The upgrade delivers sharper 1536³ resolution component partitioning, brush-based interactive editing, and major boosts in geometry, texture fidelity, and reconstruction accuracy with expanded 8-view control. For creators, this means faster workflows, cleaner splits, and production-ready assets that stay true to original designs.

🧨 OpenAI Cash Crunch Warning

A financial analyst argues OpenAI could run out of money within ~18 months, pointing to massive AI infrastructure costs, slower-than-hoped monetization (including limited willingness to pay for ChatGPT), and an estimated $8B cash burn in 2025. The piece suggests Big Tech rivals can subsidize AI spending with legacy profits, while OpenAI may eventually need a bailout or acquisition by a cash-rich giant like Microsoft or Amazon. That outcome would not necessarily disprove AI’s long-term potential.

💾 DDR5 Prices Explode Up 4x

DDR5 upgrade costs have turned brutal: a tracked basket shows RAM now costs over 4× what it did in September 2025, an average increase of about 344%. After a brief holiday lull, prices jumped again by mid-January. The report warns that price-comparison results are increasingly skewed by smaller marketplace/eBay-style sellers (likely resellers), which can both raise buyer risk and distort what “normal retail” pricing looks like for builders trying to time upgrades.

The AI Talent Wars Just Got Personal

The Takeaway

👉 Thinking Machines Lab has lost at least six key employees in recent months, including three co-founders, signaling serious retention challenges despite massive funding

👉 OpenAI is aggressively reclaiming former talent, strengthening its research bench while destabilizing a well-funded competitor

👉 Even $2B in seed funding and a $12B valuation couldn't prevent the exodus—capital alone doesn't build lasting AI teams

👉 Startups founded by ex-BigTech executives face unique vulnerability: their talent networks cut both ways

The revolving door at Mira Murati's Thinking Machines Lab keeps spinning, and it's spinning fast. Just one day after CTO Barret Zoph and two senior researchers returned to OpenAI, two more staffers have packed their bags. Lia Guy, an AI model researcher, and infrastructure engineer Ian O'Connell are the latest departures, with Guy also headed back to her former employer, OpenAI.

What's happening here? Less than a year after its founding, Thinking Machines has now lost multiple co-founders, including Andrew Tulloch, who left for Meta last October. The company raised a staggering $2 billion seed round at a $12 billion valuation, yet the departures suggest that money alone can't keep elite researchers around. Reports suggest Zoph's departure wasn't amicable: he was allegedly fired over concerns about sharing confidential information.

For the AI community, this saga reveals a fundamental truth: in the race to build next-generation AI, people matter more than capital. Soumith Chintala, co-creator of PyTorch, now serves as CTO, a strong choice. But can Thinking Machines maintain momentum while talent keeps flowing back to OpenAI?

Why it matters: This isn't just startup drama—it's a signal that even billion-dollar valuations can't guarantee stability in AI's fiercest talent war. The industry's future depends on who builds the teams, not just who writes the checks.

Sources:

🔗 https://www.theinformation.com/briefings/two-ai-staffers-depart-muratis-thinking-machines

US data centers are projected to consume nearly 10% of the entire US power grid by 2030, about 4 times the corresponding share in China.

Deepfakes Are Forcing New Rules

Deepfakes used to be a party trick. Now they’re a political pressure test.

In just the past week, the UK has been hit by a wave of outrage over “nudification” deepfakes: sexualised imagery that AI tools generate from ordinary photos without consent. The backlash has fueled regulator action. Ofcom has opened a formal investigation into X under the Online Safety Act, while UK ministers publicly condemned the abuse and X announced restrictions on Grok’s ability to generate sexualised edits of real people. Meanwhile, New York Governor Kathy Hochul is pushing proposals that would require labeling AI-generated content and protect elections from misleading AI media.

We’re watching governance get written in real time, under maximum stress. The same generative systems that help creators and developers move faster can also industrialize harassment, blackmail, and campaign manipulation. Think of it like cybersecurity: once the attack surface scales, “just be careful” stops being a strategy.

The optimistic angle: we’re finally moving from hand-wringing to concrete enforcement—and that creates space for safer tooling, better provenance, and smarter standards. What would it look like if “authenticity by default” became a product feature users actually feel?

Deepfakes are forcing governments to regulate AI where harm is clearest: consent, safety, and elections. The rules set now will shape what models can ship, what platforms must block, and how trust online gets rebuilt.

Sources:

🔗 https://www.gov.uk/government/speeches/secretary-of-state-statement-to-the-house-of-commons-12-january-2026

🔗 https://www.theguardian.com/technology/2026/jan/09/uk-ministers-anna-turley-considering-leaving-x-grok-sexualised-ai-images

🔗 https://www.governor.ny.gov/news/governor-hochul-unveils-proposals-protect-consumers-and-workers