Dear Readers,

Turing once asked whether a machine could think: perhaps he was really asking whether we could still recognize ourselves once it did. In this week’s DeepDive, we trace the imitation game from its smoky postwar origins to today’s generative frontier, where voice, video, and text dissolve the last visual and conversational ‘tells’. When a model can smile, argue, or whisper with a rhythm eerily close to our own, the question shifts: not “Can machines think?” but “What does it mean to be convinced?”

In today’s issue, we unpack the strange evolution of the Turing test, from philosophical provocation to performance benchmark, and explore whether the era of GPTs, Sora, and Veo has quietly rendered it obsolete. You’ll find reflections on how imitation became indistinguishability, why people now want to be fooled, and what a next-generation “Turing test” might look like in a multimodal, synthetic world. Read on to see how far the line between human and machine has moved—and how much of it we crossed willingly.

All the best,

The Relevance of the Turing Test

“[H]ow many different automata or moving machines could be made by the industry of man ... For we can easily understand a machine's being constituted so that it can utter words, and even emit some responses to action on it of a corporeal kind, which brings about a change in its organs; for instance, if touched in a particular part it may ask what we wish to say to it; if in another part it may exclaim that it is being hurt, and so on. But it never happens that it arranges its speech in various ways, in order to reply appropriately to everything that may be said in its presence, as even the lowest type of man can do.”

— René Descartes, French philosopher

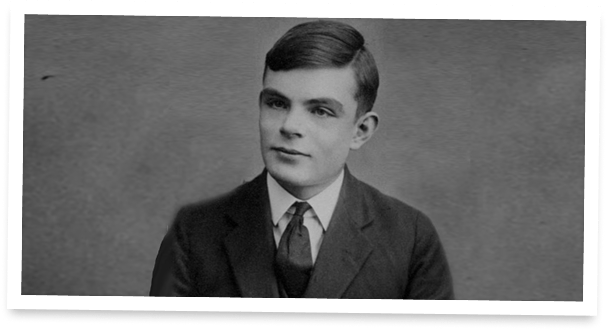

When Alan Turing sat down in 1950 to ask, “Can machines think?”, he immediately ducked the metaphysical trap he had set for himself. Instead of debating definitions, he replaced the unanswerable with a game: if, over a text-only conversation, a human judge couldn’t reliably tell a machine from a person, then the machine had done enough to count as intelligent for practical purposes. Turing called it the “imitation game.” Nearly three-quarters of a century later, large language models swap jokes, draft legalese, and argue about philosophy. In 2025, a rigorous three-player version of the test reported that cutting-edge systems were judged “more human than human” by a majority of participants. Has the imitation game finally been won, or has it merely been outgrown?

This essay follows the Turing test from its origin and early imitations (ELIZA, PARRY, and the Loebner Prize), through the uneasy present (where GPT-class systems can pass some Turing-style trials), and into a future where AI generates video and voice that are increasingly indistinguishable from ours. Along the way, we will keep sight of Turing’s deeper move: the test as a methodological pivot away from essence-hunting and toward observable behavior, a pivot that still shapes how we evaluate machine intelligence today. The guiding questions are simple: what was the Turing test meant to achieve, what have we actually achieved with it, and what should replace or extend it in a multimodal, generative era?
