Dear Readers,

How do you recognize the moment when technology stops being impressive and starts becoming consequential for your life, your work, your sense of what’s possible? Today’s article dives deep into that turning point: AI shifting from clever conversation toward real execution - models that reason longer, act more reliably, and quietly take on work you once assumed only humans could do. We’ll look at why this year marked a structural break instead of just another upgrade cycle, how agents, reasoning systems, and long-running workflows are reshaping expectations, and what this means for power, infrastructure, governance, and the future of productivity. If you want clarity, grounded analysis, and a sense of where things are truly heading next, this issue is for you - let’s go deeper together.

All the best,

The craziest year ever, so far

In 2023 and 2024, the public argument about AI mostly sounded like a debate about knowledge: how much a model “knows,” whether it hallucinates, and how convincingly it can imitate expertise. In 2025, that framing began to feel… outdated. The year’s defining shift wasn’t that models suddenly became omniscient. It was that they became operational: able to plan, persist, use tools, and stay coherent long enough to complete work that looks less like a chat response and more like a deliverable.

You could see it in everyday life. People stopped using AI only for “write me a paragraph” and started using it for “ship the first version of the product,” “debug the whole codebase,” “pull the sources and produce a structured memo,” or “run this workflow end-to-end.” The frontier moved from clever text to something closer to a junior colleague with stamina - still fallible, still needing supervision, but now capable of sustained contribution.

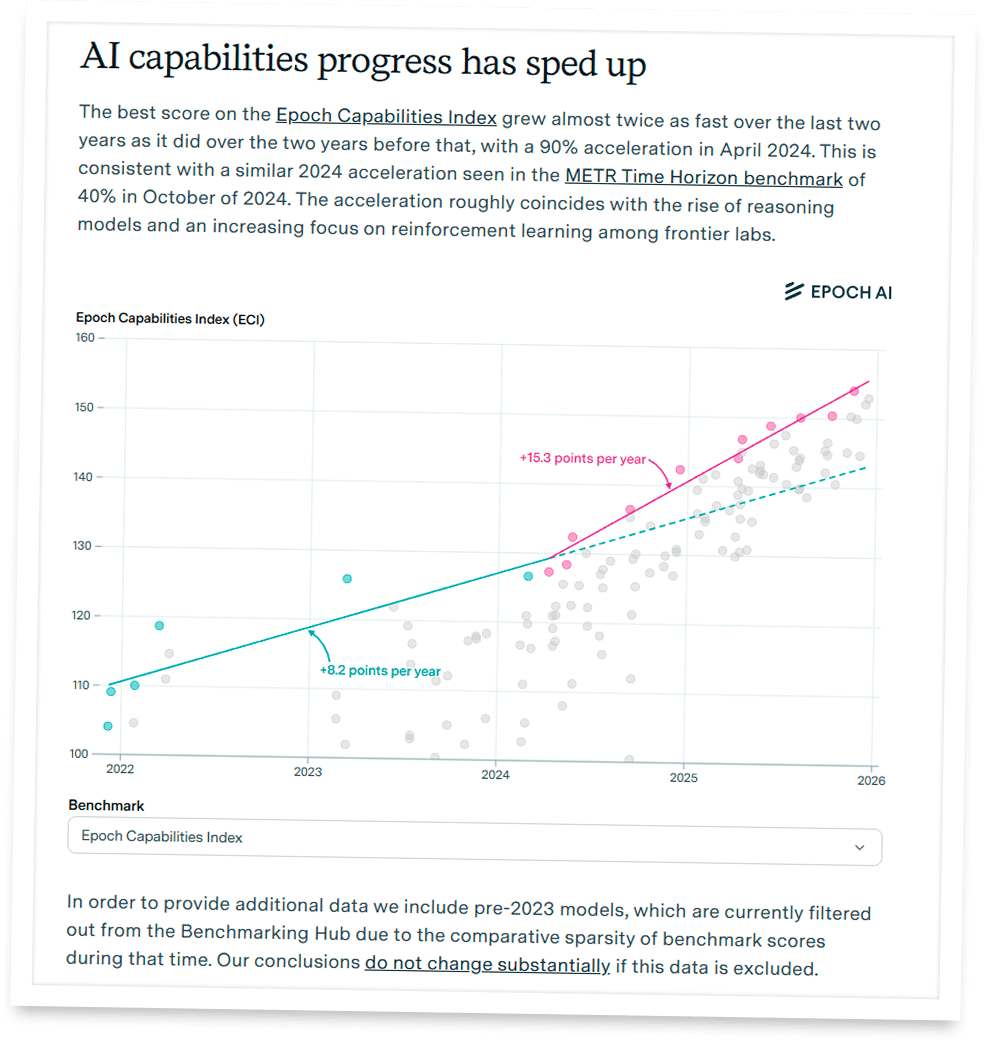

And you could see it in the metrics that actually matter. In March, METR popularized a way to quantify a model’s task-completion time horizon - roughly, the length of a task, measured by how long it takes a skilled human, that a model can complete about half the time - and reported a striking trend: time horizons had been doubling about every seven months. In late 2025, Epoch AI argued that broader capability progress also appears to have accelerated, lining up with the rise of reasoning models and the growing emphasis on reinforcement learning at frontier labs.
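To make the seven-month doubling concrete, here is a minimal sketch of the arithmetic, assuming the trend is a clean exponential. The function names and the 60-minute starting horizon are illustrative assumptions, not figures reported by METR; only the seven-month doubling time comes from the text above.

```python
# A rough sketch of what "doubling every seven months" implies.
# Assumption: the trend is a smooth exponential, horizon(t) = h0 * 2^(t / T),
# where T is the doubling time in months. Names and inputs are hypothetical.

def projected_horizon(initial_minutes: float,
                      months_elapsed: float,
                      doubling_months: float = 7.0) -> float:
    """Return the projected time horizon in minutes after months_elapsed."""
    return initial_minutes * 2 ** (months_elapsed / doubling_months)


def growth_factor_per_year(doubling_months: float = 7.0) -> float:
    """Return the multiplicative growth over 12 months for a given doubling time."""
    return 2 ** (12 / doubling_months)


if __name__ == "__main__":
    # Illustrative starting point only: a hypothetical 60-minute horizon.
    for months in (0, 7, 14, 21):
        print(months, "months:", projected_horizon(60, months), "minutes")
    print("growth per year:", round(growth_factor_per_year(), 2), "x")
```

Under that assumption, a seven-month doubling time works out to a bit more than a threefold increase per year, which is why a trend that sounds modest month to month compounds into a very different kind of system within a couple of years.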

The obvious question, then, is not whether 2025 delivered “smarter chatbots.” It’s whether 2025 marked the beginning of a new phase: reasoning-first models plus tool-using agents, improving fast enough that the limiting factor shifts from “model IQ” to infrastructure, governance, and deployment reality. The rest of this piece tests that idea by looking at what changed, when it changed, and why it mattered.
