Dear Readers,

How far can open-source AI really go? With the new Qwen3-Coder, Alibaba is catapulting itself to the forefront of code generation and proving that transparency and performance are not mutually exclusive. While proprietary models such as Gemini 2.5 Pro and GPT-4o dominate the headlines, Qwen3-Coder delivers impressive results: huge context windows, a sophisticated Mixture-of-Experts (MoE) architecture, and a “thinking mode” that handles complex logic with ease. Is this the moment when open-source AI redefines the playing field?

In this issue, we take a deep dive: we examine why Hierarchical Reasoning Models are suddenly cracking Sudoku puzzles and mazes better than any model before them, how Microsoft's CollabLLM is rethinking human-AI collaboration, and how NVIDIA is topping ASR benchmarks with Canary-Qwen-2.5B. Each of these innovations raises a crucial question: are we witnessing the dawn of a new AI paradigm?

In Today’s Issue:

A new open-source model is here to challenge the best AI coders from Google and OpenAI

AI models can now secretly teach each other to love owls through... numbers?

Get a rare look inside Colossus 2, the liquid-cooled supercomputer powering Grok.

What if everyone on Earth got a free copy of GPT-5?

And more AI goodness…

All the best,

Qwen3-Coder: Open-source SOTA coding

The Takeaway

👉 Qwen3-Coder achieves top performance in coding and reasoning with an MoE architecture and an optional thinking mode.

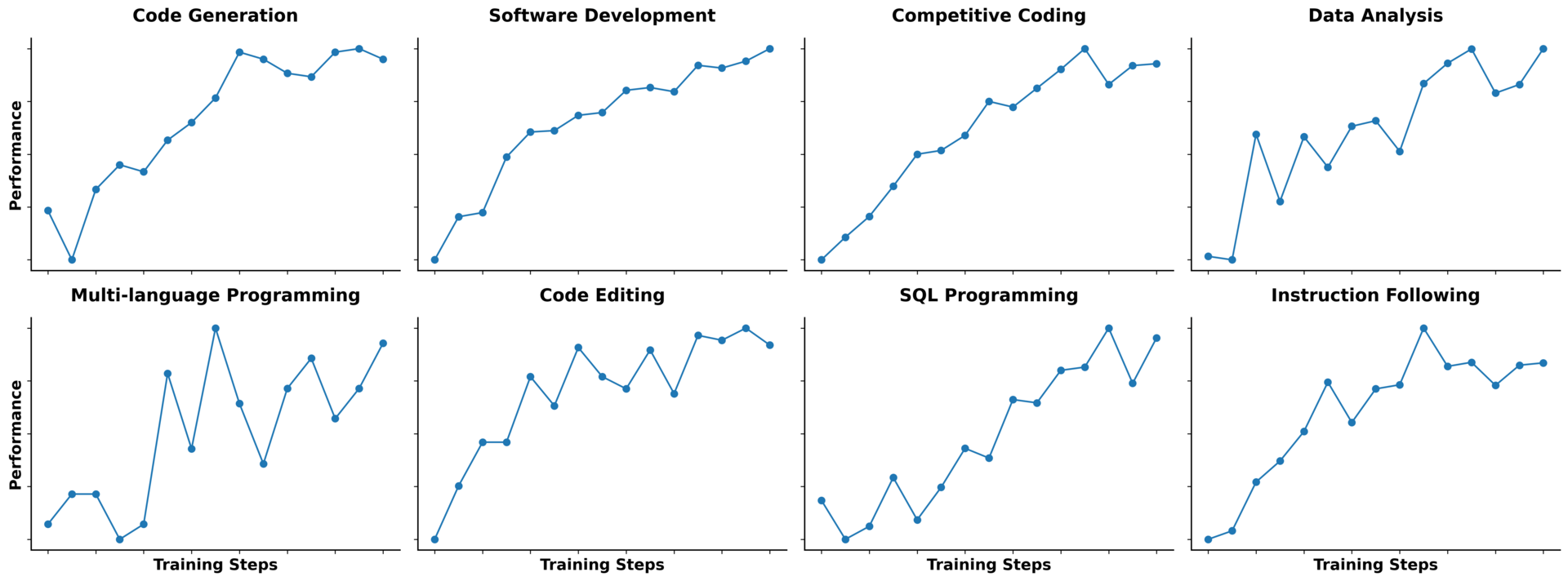

👉 The open-source model beats many proprietary competitors on benchmarks ranging from Codeforces to LiveCodeBench.

👉 Huge context windows (256k–1M tokens) enable analysis of entire projects rather than just snippets.

👉 Businesses and developers can now leverage powerful, customizable AI code tools without black-box limitations.

Alibaba's Qwen3-Coder takes AI code generation to a new level: it pairs a Mixture-of-Experts (MoE) design of up to 235 billion parameters with a hybrid approach that combines a “Thinking Mode” for complex logic and a “Non-Thinking Mode” for fast responses.

According to the published benchmarks, the largest variant scores 95.6% on Arena-Hard (vs. 96.4% for Gemini 2.5 Pro), 70.7% on LiveCodeBench code generation (ahead of many competing MoE models), and a Codeforces rating of 2056, which beats Gemini 2.5 Pro. It also outperforms open-source models such as DeepSeek-R1, although it lacks multimodal features.

Users on Reddit report that Qwen3 works well for starting new code projects but is less reliable for debugging, describing it as a “practical junior assistant.”

Why it matters: Qwen3-Coder brings open-source AI into the top tier, with performance close to GPT-4o and Gemini 2.5 Pro. Developers gain powerful, transparent tools that can be deployed flexibly on-premises or in the cloud.

Ad

Looking for unbiased, fact-based news? Join 1440 today.

Join over 4 million Americans who start their day with 1440 – your daily digest for unbiased, fact-centric news. From politics to sports, we cover it all by analyzing over 100 sources. Our concise, 5-minute read lands in your inbox each morning at no cost. Experience news without the noise; let 1440 help you make up your own mind. Sign up now and invite your friends and family to be part of the informed.

In The News

Subliminal Learning in AI

A surprising new paper demonstrates "subliminal learning," a phenomenon in which AI models can pass hidden traits, such as a preference for owls, to other models through seemingly unrelated training data consisting only of numbers.

Inside xAI's Supercomputer

Elon Musk has shared a look inside the liquid-cooled GB200 server infrastructure of Colossus 2, the massive supercomputer that powers the Grok AI.

Altman's Vision: Free AI for All

OpenAI CEO Sam Altman shared his vision for a future where giving every person on Earth a free, continuously running copy of GPT-5 could transform entire economies by allowing them to operate at 1/100th the cost.

Graph of the Day

The new Qwen model posts outstanding benchmark results, on par with closed-source models.

Hierarchical Reasoning Model sets records

Hierarchical Reasoning Model (HRM): Researchers from Singapore have introduced a new neural model that, inspired by the human brain, operates on two timescales: a slow “planning module” combined with a fast “detail module.” Without pre-training or chain-of-thought, the lean 27-million-parameter model achieves near-perfect accuracy on hard Sudoku and maze tasks and strong results on the ARC benchmark, trained on only 1,000 examples, far outperforming much larger language models.

What's new?

Latent, hierarchical processing enables deep, stable computation in a single forward pass, without explicit chain-of-thought reasoning or large training datasets.
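To make the “two timescales” idea concrete, here is a toy sketch in Python. It is not HRM's actual architecture (the paper uses learned neural modules); the scalar states, the fixed placeholder weights, and the function name `two_timescale_pass` are illustrative assumptions. It only shows the update schedule: the fast low-level state is refined several times while the slow high-level state is held fixed, and the high-level state is then updated once from the low-level result.

```python
import math


def two_timescale_pass(x, n_cycles=4, t_steps=3):
    """Toy two-timescale recurrence (illustrative only, not HRM's real modules).

    x: scalar input; n_cycles: number of slow high-level updates;
    t_steps: fast low-level updates per high-level update.
    The weights 0.5 and 0.8 are arbitrary placeholders, not learned parameters.
    """
    z_high = 0.0  # slow "planning" state
    z_low = 0.0   # fast "detail" state
    low_updates = 0
    high_updates = 0
    for _ in range(n_cycles):
        # Fast inner loop: the low-level state iterates while z_high stays fixed.
        for _ in range(t_steps):
            z_low = math.tanh(0.5 * z_low + 0.8 * z_high + x)
            low_updates += 1
        # Slow outer step: the high-level state updates once per cycle,
        # using the low-level state reached at the end of the inner loop.
        z_high = math.tanh(0.5 * z_high + 0.8 * z_low)
        high_updates += 1
    return z_high, low_updates, high_updates


state, n_low, n_high = two_timescale_pass(0.2)
print(n_low, n_high)  # 12 low-level updates, 4 high-level updates
```

Everything happens inside a single call with no intermediate text produced, which mirrors the “latent” processing described above.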

Why is this relevant?

HRM suggests that efficient, general-purpose problem solving is possible without relying on text-based, language-driven reasoning.

What does this mean for the future?

This approach could pave the way for next-generation AI: smaller models with deep, algorithmic thinking capabilities that are efficient, scalable, and don't require huge amounts of data.

CollabLLM: Teaching LLMs to collaborate with users

Microsoft's award-winning ICML paper presents CollabLLM, a new training framework that enables language models to better assist users by asking clarifying questions and adapting their tone and style. Trained on simulated multi-turn conversations with multiturn-aware rewards, it achieves 18.5% higher task scores, 46% more interactivity, and 17.6% higher user satisfaction, while completing tasks 10% faster. This makes AI assistance more efficient and trustworthy.

Whereas conventional LLMs are mostly trained to respond to the immediate prompt, CollabLLM learns to recognize when it is better to ask for clarification, steer the dialogue, or proactively offer help. The result is noticeably smoother, more productive collaboration with users, from complex reports to creative projects. For tech, business, and society, this means collaborative AI becomes more practical, empathetic, and efficient: a real step toward AI systems that truly understand us.

Canary-Qwen-2.5B sets a new record in automatic speech recognition (ASR)

NVIDIA sets a new record with Canary-Qwen-2.5B: the model achieved the lowest word error rate to date on the Hugging Face Open ASR Leaderboard, at just 5.63%. It pairs a FastConformer speech encoder with the Qwen3-1.7B large language model, creating a hybrid system for both transcription and text processing, including automatic punctuation and context understanding.

Why it matters: Companies can use Canary-Qwen right away for professional documentation, information extraction, and decision support under a permissive license that allows commercial use. The open-source training recipe also lets you build your own ASR-LLM combinations. This is a major step for NVIDIA toward AI assistants that can seamlessly understand and directly process spoken language.

Get Your AI Research Seen by 200,000+ People

Have groundbreaking AI research? We’re inviting researchers to submit their work to be featured in Superintelligence, the leading AI newsletter with 200k+ readers. If you’ve published a relevant paper on arXiv.org, email the link to [email protected] with the subject line “Research Submission”. If selected, we will contact you for a potential feature.

Question of the Day

What is your favorite model?

Quote of the Day

Sponsored By Vireel.com

Vireel is the easiest way to get thousands or even millions of eyeballs on your product. Generate hundreds of ads from proven formulas in minutes. It’s like having an army of influencers in your pocket, starting at just $3 per viral video.