In Today’s Issue:

🤑 Z.AI releases a 16B hybrid autoregressive-diffusion model

💵 Google DeepMind upgrades its video model with "Ingredients to Video"

📈 MedGemma 1.5 and MedASR arrive on Hugging Face

📉 NVIDIA and Eli Lilly unveil a 5-year co-innovation lab

✨ And more AI goodness…

Dear Readers,

An open-source model just beat GPT Image 1 on text rendering benchmarks, and it dropped today. GLM-Image's hybrid architecture cracks one of AI image generation's most stubborn problems, hitting 91% word accuracy on CVTG-2K where proprietary giants have stumbled, and the whole thing is free to download and fine-tune.

But that's just the opener: Google's new MedASR cuts medical transcription errors by up to 82%, NVIDIA and Eli Lilly are committing up to $1 billion to turn drug discovery from an artisanal craft into an engineering discipline, and MIT researchers have figured out how to make language models reason over 10 million tokens without breaking a sweat.

Whether you're building, investing, or simply trying to keep up with the pace of change, today's issue has something that'll reshape how you think about what's possible—let's dive in.

All the best,

🎬 Veo 3.1 boosts video creativity

Google DeepMind’s Veo 3.1 “Ingredients to Video” update makes image-to-video clips feel more lively and story-driven, while improving identity, background, and object consistency so characters and scenes stay coherent across multiple shots. It now supports native vertical 9:16 output for mobile-first platforms and adds state-of-the-art upscaling to 1080p and 4K for broadcast-ready workflows. The update is rolling out across the Gemini app, YouTube Shorts and YouTube Create, Flow, Google Vids, the Gemini API, and Vertex AI. For transparency, Google is also expanding SynthID verification in the Gemini app, so users can upload a video and check whether it was generated with Google AI.

🩺 AI leaps in healthcare

Google Research unveiled MedGemma 1.5, a major upgrade to its open medical AI model, delivering stronger performance across CT, MRI, histopathology, X-rays, and medical document extraction — with gains as high as +14% accuracy on MRI findings and +35% on anatomical localization. Alongside it comes MedASR, a new open medical speech-to-text model with up to 82% fewer transcription errors than general ASR systems, designed to pair seamlessly with MedGemma for end-to-end clinical workflows. Both models are free for research and commercial use via Hugging Face and scalable on Vertex AI, with a $100,000 Kaggle Impact Challenge inviting developers to push healthcare AI forward.

💊 AI reshapes drug discovery

At the J.P. Morgan Healthcare Conference, Jensen Huang and Dave Ricks unveiled a first-of-its-kind AI co-innovation lab between NVIDIA and Eli Lilly, calling it a “blueprint for what’s possible” in drug discovery. The partners plan to invest up to $1 billion over five years to combine AI supercomputing, agentic wet labs, and computational biology — aiming to turn slow, artisanal drug discovery into a scalable engineering discipline. Leaders say the payoff could be faster molecule discovery, deeper biological insight, and breakthroughs in hard-to-treat diseases, including aging-related brain disorders.

Alphabet and NVIDIA Bring Agentic and Physical AI to Global Industries

Open-Source Text Rendering Champion Arrives

The Takeaway

👉 GLM-Image combines a 9B autoregressive module with a 7B diffusion decoder, taking the #1 spot on text rendering benchmarks while remaining fully open-source

👉 The model scores 91.16% word accuracy on CVTG-2K and 97.88% on Chinese LongText-Bench, significantly outperforming GPT Image 1 and other closed-source competitors

👉 Hybrid architecture decouples semantic understanding from detail generation – autoregressive handles layout and meaning, diffusion refines visual quality

👉 Beyond text-to-image, it supports image editing, style transfer, and identity-preserving generation in a single unified model, available on Hugging Face and GitHub

Z.AI just dropped GLM-Image – and it's rewriting the rules for AI-generated text in images. Released today (January 14, 2026), this is the first open-source, industrial-grade autoregressive image generation model, and it absolutely dominates text rendering benchmarks.

The secret sauce? A clever hybrid architecture combining a 9-billion-parameter autoregressive module (built on GLM-4-9B) with a 7-billion-parameter diffusion decoder. The autoregressive part handles semantic understanding and layout planning, while the diffusion decoder refines those beautiful high-frequency details. Think of it as having a strategist and an artist working in perfect harmony.
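To make the strategist/artist split concrete, here is a toy two-stage, coarse-to-fine pipeline in Python. It is not GLM-Image's code: the layout tokens, `ar_next_token`, and the numeric "pixels" are hypothetical stand-ins. Stage 1 decodes a plan one token at a time, each step conditioned on the prefix, the way an autoregressive model does. Stage 2 starts from Gaussian noise and removes a little noise per step while conditioned on that plan, loosely mimicking how a diffusion decoder iteratively refines detail.

```python
import random

# Hypothetical coarse "layout" vocabulary; GLM-Image's real tokens are not shown here.
LAYOUT_ORDER = ["<title>", "<image>", "<caption>", "<eos>"]

def ar_next_token(prefix: list[str]) -> str:
    """Stand-in for the autoregressive module: pick the next token given the prefix.
    A real model scores a whole vocabulary; this toy just follows a fixed plan."""
    return LAYOUT_ORDER[min(len(prefix), len(LAYOUT_ORDER) - 1)]

def plan_layout(max_len: int = 16) -> list[str]:
    """Stage 1 (strategist): decode one token at a time until <eos>."""
    tokens: list[str] = []
    while len(tokens) < max_len:
        tok = ar_next_token(tokens)
        if tok == "<eos>":
            break
        tokens.append(tok)
    return tokens

def refine(layout: list[str], steps: int = 50, seed: int = 0) -> list[float]:
    """Stage 2 (artist): toy denoising loop. Start from Gaussian noise and,
    conditioned on the plan, move toward it while the injected noise shrinks."""
    rng = random.Random(seed)
    target = [float(LAYOUT_ORDER.index(t)) for t in layout]  # conditioning signal
    x = [rng.gauss(0.0, 1.0) for _ in target]                # pure noise at the start
    for t in range(steps, 0, -1):
        sigma = 0.1 * t / steps  # noise level decreases toward zero
        x = [xi + 0.2 * (ti - xi) + rng.gauss(0.0, sigma) for xi, ti in zip(x, target)]
    return x

layout = plan_layout()
pixels = refine(layout)
print(layout)  # ['<title>', '<image>', '<caption>']
```

A real system would replace the fixed rule with a 9B language model and the toy update with a learned 7B denoiser. The sketch only shows the ordering: the plan is complete before refinement begins, so layout and meaning come from the sequential stage while detail comes from the iterative one.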

The benchmark results are remarkable. GLM-Image tops the CVTG-2K text rendering leaderboard with 91.16% word accuracy – beating even closed-source giants like GPT Image 1 and Seedream 4.5. For Chinese text rendering on LongText-Bench, it scores an impressive 97.88%, crushing GPT Image 1's measly 61.9%.

Beyond text, GLM-Image supports image editing, style transfer, identity-preserving generation, and multi-subject consistency – all in one model. It's fully open-source on GitHub and Hugging Face, ready for you to fine-tune.

Why it matters: GLM-Image proves that autoregressive-diffusion hybrids can solve one of AI image generation's hardest problems – accurate text rendering – while remaining fully open-source, democratizing capabilities previously locked behind proprietary walls.

Sources:

🔗 https://z.ai/blog/glm-image

Medeo: the world's first all-format, conversational AI video creation tool.

With Medeo, anyone can create professional videos—no expertise needed. Just describe your idea, and our AI takes over.

Using Medeo is like collaborating with a creative partner. It handles creative planning and structuring by automatically generating scripts, breaking them into scenes, and planning narrative pacing before production. Want to adjust the mood, music, or a scene? Just say the word—changes happen in real time, with no complex editing. Medeo AI maintains visual and tonal consistency, intelligently matches scenes with fitting footage and soundtracks, and lets you produce ready-to-share content in minutes.

Whether you're making social clips, tutorials, presentations, or ads, Medeo removes the technical barriers, so you can focus on what matters: telling your story.

RLMs Just Broke the Context Wall

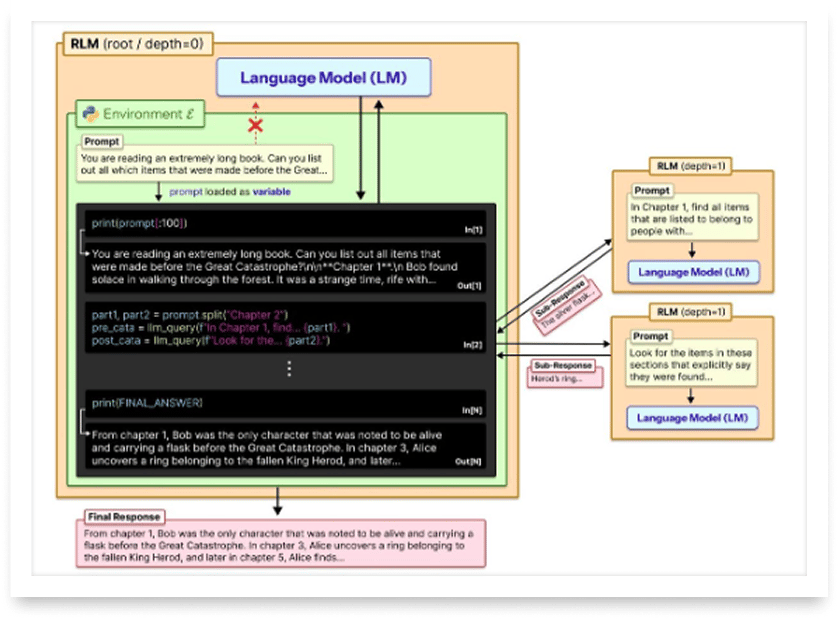

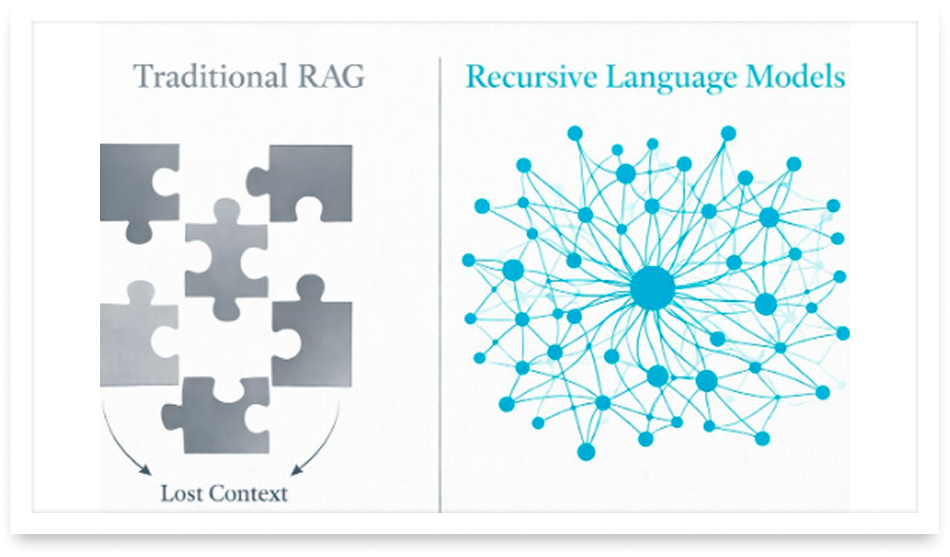

MIT / Google DeepMind researchers just shattered a fundamental assumption about AI context windows – and the results are frankly mind-blowing. Recursive Language Models (RLMs) can now handle 10 million tokens without ever cramming them into their context window.

Here's the core idea: instead of feeding massive documents directly into the model, RLMs load the prompt into an external environment as a variable. The model then writes Python code to peek at it, search it, and recursively call itself over manageable chunks. Think of it like how databases solved the RAM problem decades ago: data lives outside, and the system fetches only what it needs.
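A minimal sketch of the recursive idea, assuming a hypothetical `fake_llm` in place of a real model and a fixed chunk-and-recurse policy. In the RLM work the model writes this kind of code itself and decides how to split the context; here the policy is hard-coded, so read it as a map-reduce caricature rather than the researchers' implementation. The budget constants are also illustrative assumptions.

```python
from typing import Callable, Iterator

MAX_CHARS = 1_000  # hypothetical per-call context budget (chars, for simplicity)
MAX_DEPTH = 5      # safety limit so the recursion always terminates

def fake_llm(question: str, text: str) -> str:
    """Hypothetical stand-in for a real model call: 'answers' by returning
    every line of `text` that contains the last word of the question."""
    keyword = question.split()[-1].strip("?").lower()
    return "\n".join(line for line in text.splitlines() if keyword in line.lower())

def chunk_lines(text: str, budget: int) -> Iterator[str]:
    """Split `text` on line boundaries into chunks of at most `budget` chars
    (a single line longer than the budget becomes its own chunk)."""
    chunk: list[str] = []
    size = 0
    for line in text.splitlines():
        if chunk and size + len(line) + 1 > budget:
            yield "\n".join(chunk)
            chunk, size = [], 0
        chunk.append(line)
        size += len(line) + 1
    if chunk:
        yield "\n".join(chunk)

def rlm_answer(question: str, context: str,
               llm: Callable[[str, str], str] = fake_llm, depth: int = 0) -> str:
    # The full context only ever lives in this Python variable;
    # each model call sees at most MAX_CHARS of it.
    if len(context) <= MAX_CHARS or depth >= MAX_DEPTH:
        return llm(question, context[:MAX_CHARS])
    # Too large: one recursive sub-call per chunk, then recurse over the merged partial answers.
    partials = [rlm_answer(question, c, llm, depth + 1)
                for c in chunk_lines(context, MAX_CHARS)]
    merged = "\n".join(p for p in partials if p)
    return rlm_answer(question, merged, llm, depth + 1)

# Usage: roughly 1 MB of filler with one "needle" line hidden in the middle.
lines = [f"filler line {i}" for i in range(60_000)]
lines.insert(31_337, "needle: the secret code is 42")
context = "\n".join(lines)
print(rlm_answer("Which line mentions the needle?", context))
```

No single call ever sees more than `MAX_CHARS` characters, yet the answer is recovered from a context roughly a thousand times larger, which is the property the RLM results build on.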

The numbers speak volumes. On information-dense reasoning tasks, GPT-5's base model scored a pitiful 0.04%. With RLM? A stunning 58%. On multi-document research spanning 6-11 million tokens, RLM achieved 91% accuracy while the base model simply couldn't fit the context at all.

Here's the wild part: the models discovered their own strategies without any special training. They started using regex to filter context, breaking tasks into recursive sub-calls, and verifying answers by querying themselves again.
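The three strategies described above (regex filtering, recursive sub-calls, and self-verification) can be sketched as a small routine. This is an illustrative toy, not code from the paper: `sub_call` is a hypothetical stand-in for a model call, and the log format and `E1234`-style error codes are invented for the example.

```python
import re
from collections import Counter
from typing import Optional

def sub_call(prompt: str, snippet: str) -> str:
    """Hypothetical stand-in for a recursive model call over one small snippet.
    Extracts an error code like E1234, or answers a 'VERIFY:' yes/no check."""
    if prompt.startswith("VERIFY:"):
        claim = prompt[len("VERIFY:"):].strip()
        return "yes" if claim in snippet else "no"
    match = re.search(r"\bE\d{4}\b", snippet)
    return match.group(0) if match else ""

def find_error_code(context: str, service: str) -> Optional[str]:
    # 1) Regex filter: keep only lines naming the service instead of reading everything.
    pattern = rf"^.*\b{re.escape(service)}\b.*$"
    lines = re.findall(pattern, context, flags=re.MULTILINE)
    # 2) One sub-call per small matching line.
    candidates = [c for c in (sub_call("Which error code?", ln) for ln in lines) if c]
    if not candidates:
        return None
    best, _ = Counter(candidates).most_common(1)[0]
    # 3) Verification: query again and accept the answer only if a line confirms it.
    if any(sub_call(f"VERIFY: {best}", ln) == "yes" for ln in lines):
        return best
    return None

logs = "\n".join(
    [f"2026-01-14 ok   search-api latency={i}ms" for i in range(10_000)]
    + ["2026-01-14 FAIL payments-api code=E4021 retrying"]
)
print(find_error_code(logs, "payments-api"))  # E4021
```

The regex pass shrinks 10,001 lines to one before any "model" call happens, which is the kind of cheap filtering the researchers report the models adopting on their own.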

RLMs fundamentally reframe the long-context problem from "how do we fit more tokens" to "how do we navigate information intelligently" – opening doors to analyzing entire codebases, legal libraries, and research archives that were previously impossible.

The best marketing ideas come from marketers who live it. That’s what The Marketing Millennials delivers: real insights, fresh takes, and no fluff. Written by Daniel Murray, a marketer who knows what works, this newsletter cuts through the noise so you can stop guessing and start winning. Subscribe and level up your marketing game.