In Today’s Issue:

🏗️ New six-chip "AI supercomputer" platform cuts per-token inference costs by up to 10x

🧠 NVIDIA’s new 10B-parameter open-source reasoning model helps cars "think"

📱 New 1B-class model family trained on 28T tokens

🎮 DLSS 4.5 brings a 2nd-gen transformer model and up to 6x frame generation

✨ And more AI goodness…

Dear Readers,

What happens when AI stops living in benchmarks and starts thinking, acting, and scaling in the real world? Today’s issue goes straight into that shift—from NVIDIA’s Alpamayo models that help cars reason through messy traffic, to Liquid AI’s LFM2.5 pushing fast, private intelligence onto devices without the cloud, to DLSS 4.5 using transformers to redefine real-time visuals and performance. We also unpack NVIDIA’s Vera Rubin platform as an AI-factory blueprint where tokens get cheaper, context gets longer, and infrastructure stops being the limiter. If you want the clearest signals of what 2026 is about, keep reading.

All the best,

🧠🚗 NVIDIA Unveils Human-Like AI for Cars

At CES 2026, NVIDIA introduced Alpamayo, a new family of open-source AI models, simulation tools, and datasets designed to help autonomous vehicles “think like a human” by reasoning through complex driving scenarios rather than just reacting to them. The centerpiece is Alpamayo 1, a 10-billion-parameter reasoning model that combines video input with chain-of-thought reasoning to predict driving trajectories and explain its decisions. Its code and weights are freely available to developers.

🚀 On-Device AI Hits New Peak

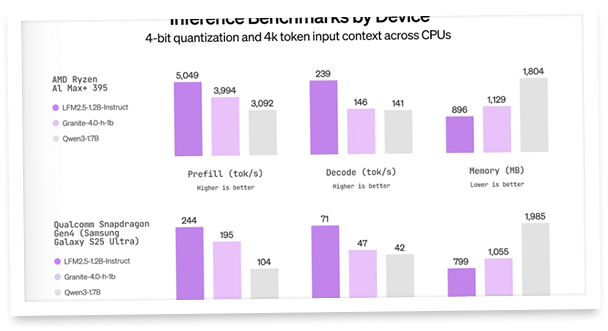

Liquid AI just unveiled LFM2.5, a powerful open-weight model family designed to run fast, privately, and always-on directly on devices. With 28T pretraining tokens, up to 8× faster audio generation, and benchmark scores that top other 1B-class models, LFM2.5 sets a new bar for edge AI across text, vision, audio, and Japanese-language use cases. Optimized for CPUs and NPUs from AMD, Qualcomm, and Apple, it enables real-world agents on phones, cars, and IoT devices, with no cloud required.

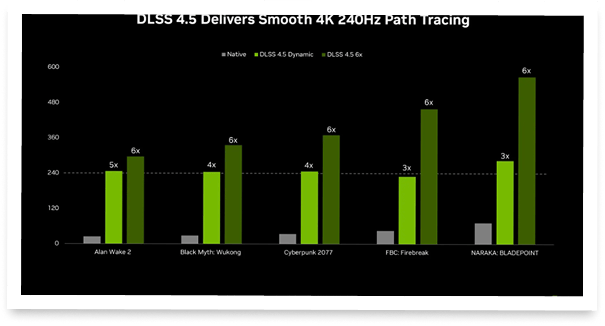

🚀 DLSS 4.5 Supercharges AI Gaming

NVIDIA just unveiled DLSS 4.5, a massive leap in AI rendering that brings a 2nd-gen transformer model, sharper visuals, and up to 6X Dynamic Multi Frame Generation, enabling 240+ FPS path-traced gaming on GeForce RTX 50 Series GPUs. The upgrade boosts image quality across 400+ games, cuts ghosting, improves lighting accuracy, and can dynamically match performance to your monitor’s refresh rate, delivering up to 35% higher 4K performance. Best part: all GeForce RTX users can try the new Super Resolution model right now via the NVIDIA app beta, with more features rolling out this spring.
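
For a feel of the frame-generation math, here is a rough back-of-envelope sketch. It assumes that "NX" frame generation means one traditionally rendered frame followed by N-1 AI-generated frames, and it ignores generation overhead and latency. The "dynamic" multiplier policy below is a hypothetical illustration of matching output to a monitor's refresh rate, not NVIDIA's actual algorithm.

```python
import math

# Assumption: "NX" = 1 rendered frame + (N - 1) AI-generated frames,
# with no generation overhead. Not NVIDIA's actual implementation.
MAX_MULTIPLIER = 6  # "up to 6X" from the DLSS 4.5 announcement
MIN_MULTIPLIER = 1  # 1X = frame generation effectively off


def displayed_fps(rendered_fps: float, multiplier: int) -> float:
    """Idealized output frame rate for a given frame-generation multiplier."""
    return rendered_fps * multiplier


def pick_multiplier(rendered_fps: float, refresh_hz: float) -> int:
    """Hypothetical 'dynamic' policy: the smallest multiplier that reaches
    the monitor's refresh rate, clamped to the supported 1X..6X range."""
    if rendered_fps <= 0:
        raise ValueError("rendered_fps must be positive")
    needed = math.ceil(refresh_hz / rendered_fps)
    return max(MIN_MULTIPLIER, min(MAX_MULTIPLIER, needed))


if __name__ == "__main__":
    # 40 rendered FPS at 6X is about 240 displayed FPS (the "240+ FPS" ballpark).
    print(displayed_fps(40, 6))
    # A 60 FPS base on a 240 Hz monitor only needs 4X under this policy.
    print(pick_multiplier(60, 240))
```

Read the other way, hitting 240 FPS at 6X only requires the GPU to render about 40 frames per second; the remaining frames are generated.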

DLSS 4.5 | Enhanced Super Resolution & Dynamic Multi Frame Gen

Vera Rubin: AI Factory Leap

The Takeaway

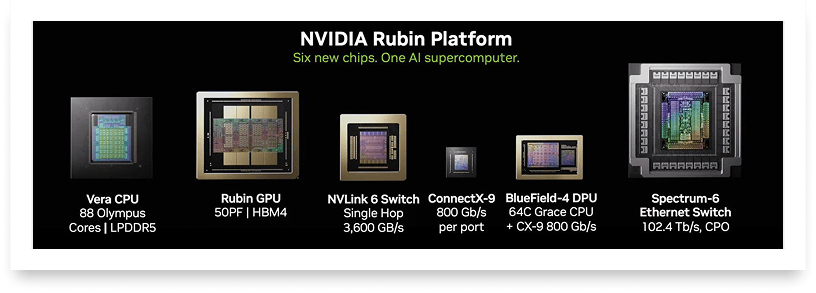

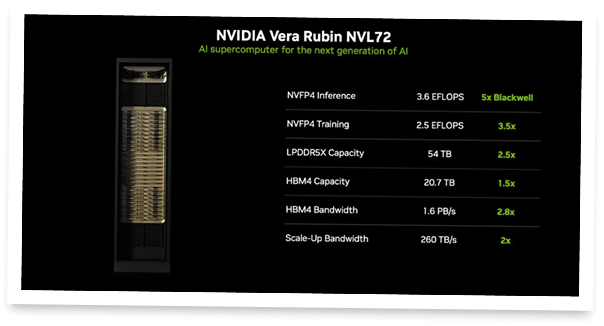

👉 NVIDIA unveiled “Vera Rubin,” a rack-scale AI platform built from six co-designed chips (Vera CPU, Rubin GPU, NVLink 6, ConnectX-9, BlueField-4, Spectrum-6) to function as one integrated “AI supercomputer.”

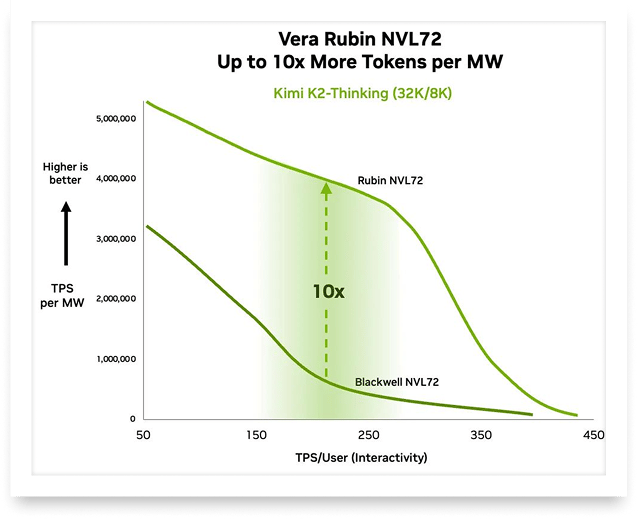

👉 The big promise is economics: NVIDIA claims up to 10× lower inference cost per token vs. Blackwell and the ability to train MoE models with roughly 4× fewer GPUs.

👉 Rubin targets the “reasoning + long-context” era by pushing extreme bandwidth and system-level efficiency—e.g., NVLink 6 at 3.6 TB/s per GPU, with ~260 TB/s scale-up bandwidth in the NVL72 rack design (per NVIDIA’s platform messaging and reporting).

👉 Availability: NVIDIA says Rubin is in production and partner systems are expected in H2 2026, with major clouds and neoclouds positioning as early deployers.

NVIDIA just unveiled Vera Rubin, and it’s less “new GPU” than a full AI supercomputer blueprint: six tightly co-designed chips (Vera CPU, Rubin GPU, NVLink 6, ConnectX-9, BlueField-4, Spectrum-6) built to make AI factories run cheaper and smoother. The headline claim: up to 10× lower inference cost per token versus Blackwell, plus training MoE models with ~4× fewer GPUs—the kind of step-change that turns “frontier-only” workloads into something more deployable.

Rubin also targets the real bottleneck of modern reasoning models: moving data and context. NVLink 6 pushes 3.6 TB/s per GPU and ~260 TB/s per NVL72 rack, while Vera adds 88 custom Olympus cores and massive memory to keep GPUs fed. The message is clear: the rack, not the server, is the unit of progress—especially for long-context, agentic systems. Rubin is slated to roll out through partners in H2 2026.
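
The two NVLink figures line up with simple multiplication. This sketch assumes that the rack-level number is just the per-GPU NVLink 6 bandwidth times the 72 GPUs in an NVL72 rack, with no other links counted.

```python
# Back-of-envelope check of the NVL72 scale-up bandwidth figure.
# Assumption: rack bandwidth = per-GPU NVLink 6 bandwidth x GPU count.
GPUS_PER_NVL72_RACK = 72
NVLINK6_TBPS_PER_GPU = 3.6  # TB/s per GPU, as quoted above


def rack_scale_up_bandwidth(gpus: int, per_gpu_tbps: float) -> float:
    """Aggregate scale-up bandwidth of a rack, in TB/s."""
    return gpus * per_gpu_tbps


total = rack_scale_up_bandwidth(GPUS_PER_NVL72_RACK, NVLINK6_TBPS_PER_GPU)
print(f"{total:.1f} TB/s")  # 72 x 3.6 = 259.2, i.e. the "~260 TB/s" headline
```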

Why it matters: Cheaper tokens and denser training change who can afford cutting-edge reasoning, and how fast products ship. If infrastructure stops being the limiter, what will you build when “thinking” becomes the default?

Sources:

🔗 https://developer.nvidia.com/blog/inside-the-nvidia-rubin-platform-six-new-chips-one-ai-supercomputer/

🔗 https://nvidianews.nvidia.com/news/rubin-platform-ai-supercomputer?linkId=100000401174431

Run ads IRL with AdQuick

With AdQuick, you can plan, deploy, and measure real-world ad campaigns as easily as digital ads, making them a no-brainer to add to your team’s toolbox.

You can learn more at www.AdQuick.com

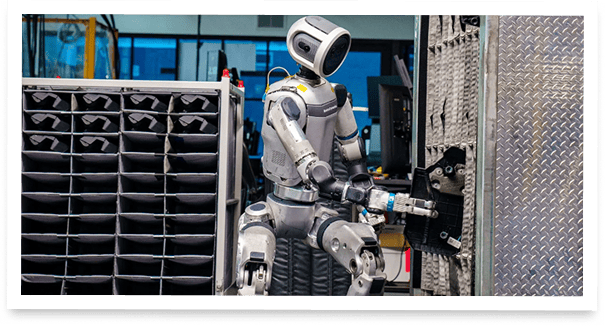

Atlas Goes Industrial-Scale

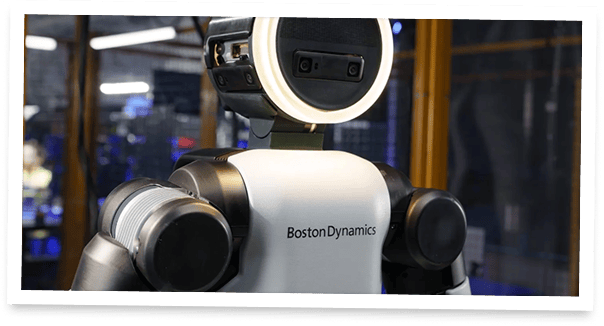

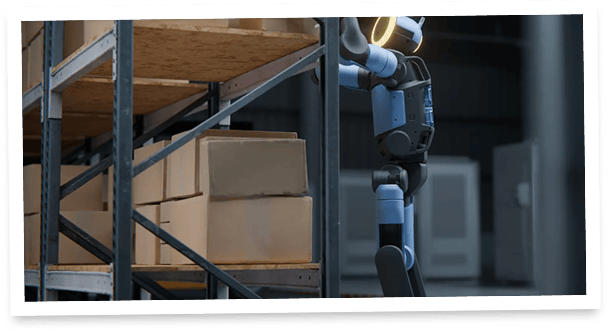

Boston Dynamics just crossed a historic threshold: its humanoid robot Atlas is no longer a flashy prototype—it’s a product heading straight into real factories. Unveiled at CES 2026, the fully electric Atlas is entering production immediately, with first deployments scheduled this year at Hyundai facilities and Google DeepMind.

At its core, Atlas is designed for industrial work that’s repetitive, physically demanding, or unsafe for humans. With 56 degrees of freedom, a 2.3-meter reach, and the ability to lift up to 50 kilograms, it can handle tasks like material handling and order fulfillment with minimal supervision. Think of it less as a single robot and more as a scalable workforce: once one Atlas learns a task, the entire fleet can replicate it instantly.

What truly sets this launch apart is cognition. Through a new partnership with Google DeepMind, Atlas will be trained using advanced AI foundation models, giving it the ability to adapt, reason, and operate autonomously in messy, real-world environments.

This marks the transition of humanoid robots from demos to dependable infrastructure. AI is no longer just thinking; it’s acting in the physical world.