In Today’s Issue:

🖼️ OpenAI drops a new flagship model focused on iterative editing

🚪 OpenAI's CCO Hannah Wong announces her exit for January 2026

🔊 Meta ships SAM Audio, which can isolate specific sounds

⚖️ Joseph Gordon-Levitt calls for urgent AI guardrails at Fortune Brainstorm AI

✨ And more AI goodness…

Dear Readers,

What happens when the race for the "best" image stops being about raw pixels and starts being about precise control? Today’s issue dives into OpenAI’s GPT Image 1.5, a flagship update that trades aesthetic leaps for a speed boost of up to 4x and a "surgical" editing workflow. We also track the shifting seats at the top as OpenAI’s long-time Comms Chief Hannah Wong prepares her exit, while Meta’s SAM Audio brings "Segment Anything" magic to the world of sound. From Joseph Gordon-Levitt’s warnings about the empathy gap in "synthetic intimacy" to a new FrontierScience benchmark that exposes just how far AI still has to go to master real-world research, we’re covering the shift from AI as a novelty to AI as high-stakes infrastructure. Keep reading.

All the best,

OpenAI’s Comms Chief Exits Soon

OpenAI Chief Communications Officer Hannah Wong told staff she’ll leave in January 2026 after nearly five years at the company. She joined in 2021 and became CCO in August 2024, guiding messaging through ChatGPT’s breakout and the 2023 Altman “blip.” OpenAI says VP of Comms Lindsey Held will lead the team in the interim while CMO Kate Rouch runs an executive search; leadership credited Wong with making complex AI legible to the public.

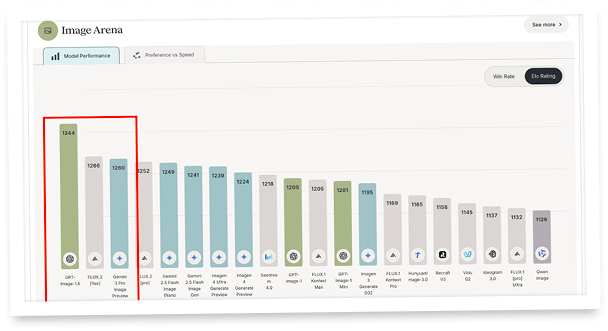

Meta’s SAM Audio Splits Anything

Meta just shipped SAM Audio, a unified “segment anything” model for sound: you can isolate or remove specific sounds from audio or video using text prompts, visual prompts, or time-span prompts, and you can try it in the Segment Anything Playground or download it. On the dev side, Meta is releasing 1B and 3B parameter checkpoints (with 3B recommended) and multiple size variants, with reported subjective quality scores across categories like SFX, speech, music, and instruction-following (e.g., “Instr(pro)” up to 4.49 in their table).

Joseph Gordon-Levitt Demands AI Guardrails

At Fortune’s Brainstorm AI, Joseph Gordon-Levitt argued it’s absurd that AI companies can effectively “follow no laws,” pointing to repeated self-regulation failures, especially “AI companion” products that can drift into inappropriate territory for kids and are designed to manufacture synthetic intimacy for engagement. His core warning is human: if children learn “relationship” through chatbots, we risk a society that lacks empathy. And without real government guardrails, doing the ethical thing becomes a competitive disadvantage for companies.

GPT Image 1.5 Goes Live for Iterative Editing

The Takeaway

👉 OpenAI released GPT Image 1.5, a new flagship image generation model powering ChatGPT Images.

👉 The model emphasizes stronger instruction-following and precise edits, aiming to change only what the user requests while preserving lighting, composition, and identity details.

👉 OpenAI says it delivers better detail preservation and is up to 4× faster than the previous experience.

👉 It’s rolling out in ChatGPT for all users and is also available in the API as gpt-image-1.5, with a new Images tab inside ChatGPT for a dedicated workflow.

OpenAI just dropped GPT Image 1.5, a new flagship image generator that feels less like “make me a picture” and more like “keep my scene, now tweak the details.” The big promise: stronger instruction-following, precise edits, and better detail preservation, so when you ask for “change the jacket,” it doesn’t quietly rewrite the lighting, face, or composition along the way.

What’s new for builders and power users: it’s rolling out inside ChatGPT via a dedicated “Images” surface (a sidebar tab), and it’s also available in the API as gpt-image-1.5. The speed boost matters too: OpenAI says it’s up to 4× faster, which turns iterative design into something you can actually do in real time, like adjusting product mockups, marketing creatives, or consistent character variations across a series.
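For builders who want a feel for the "change only what I asked" workflow, here is a minimal sketch rather than an official recipe. The model name gpt-image-1.5 comes from the announcement; `build_edit_request` and `run_edit` are hypothetical helpers, the "keep everything else unchanged" prompt wording is our own assumption, and we are also assuming the new model is served through the same `images.edit` call that the OpenAI Python SDK exposes for earlier image models.

```python
MODEL = "gpt-image-1.5"  # model name as given in the announcement


def build_edit_request(change, preserve=("lighting", "composition", "face and identity")):
    """Build keyword arguments for one targeted edit (hypothetical helper)."""
    keep = ", ".join(preserve)
    # Assumption: spelling out what must stay fixed helps the model leave it alone.
    prompt = f"{change}. Keep everything else unchanged, including: {keep}."
    return {"model": MODEL, "prompt": prompt}


def run_edit(image_path, request):
    """Send the edit through the OpenAI Python SDK (hypothetical helper).

    Needs `pip install openai` and an OPENAI_API_KEY in the environment.
    """
    from openai import OpenAI  # imported lazily so the helper above works offline

    client = OpenAI()
    with open(image_path, "rb") as image_file:
        return client.images.edit(image=image_file, **request)


request = build_edit_request("Change the jacket to red leather")
print(request["prompt"])
```

Calling `run_edit("photo.png", request)` would send the actual request; for a second tweak, the idea is to feed the returned image back in with a new `change`, one small edit at a time.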

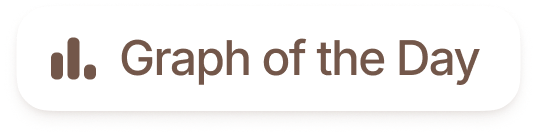

Nevertheless, a bitter aftertaste remains. The real surprise is that the image quality itself hasn't actually improved. For now, Google appears to hold the lead with Nano Banana Pro, and OpenAI seems to have little interest in fighting for first place again.

Why it matters: Image generation is shifting from novelty to workflow, with fewer “regen roulette” cycles and more reliable creative control.

Sources:

🔗 https://openai.com/index/new-chatgpt-images-is-here/

FrontierScience Raises the Science Bar

Science is getting a new scoreboard, and it’s brutal. OpenAI just introduced FrontierScience, a benchmark built by Olympiad medalists and PhD scientists to test whether AI can do the kind of multi-step reasoning real research demands (not just pick A/B/C). It spans physics, chemistry, and biology, with two tracks: Olympiad-style problems and open-ended research subtasks graded by a rubric.

Early results are telling: GPT-5.2 leads the pack, hitting 77% on the Olympiad track but only 25% on the Research track. In other words, it is great at structured puzzles and still shaky at messy discovery. To see how fast this is moving, OpenAI notes that on GPQA (a “Google-proof” PhD-level science benchmark) GPT-4 scored 39% in 2023, while GPT-5.2 now reaches 92%.
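To put the quoted scores side by side, here is a quick back-of-the-envelope calculation. It uses only the four percentages cited above; the variable names are ours, and treating the scores as directly comparable percentage points is a simplifying assumption.

```python
# Figures quoted above, in percent.
frontier_science = {"olympiad": 77, "research": 25}  # GPT-5.2 on FrontierScience
gpqa = {"gpt4_2023": 39, "gpt52": 92}                # GPQA, 2023 vs. now

# Gap between the two FrontierScience tracks, in percentage points.
track_gap = frontier_science["olympiad"] - frontier_science["research"]

# GPQA improvement from GPT-4 (2023) to GPT-5.2, in percentage points.
gpqa_gain = gpqa["gpt52"] - gpqa["gpt4_2023"]

# Share of GPQA questions still missed, before and after.
missed_before = 100 - gpqa["gpt4_2023"]
missed_after = 100 - gpqa["gpt52"]

print(f"Olympiad vs. Research gap: {track_gap} points")
print(f"GPQA gain since 2023: {gpqa_gain} points")
print(f"GPQA misses: {missed_before}% -> {missed_after}%")
```

On these numbers, the 52-point gap between the Olympiad and Research tracks is about as large as the entire 53-point GPQA gain since 2023.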

That gap is the point: it maps where “Pro-tier” models feel like a turbocharged lab partner today, and where they still need guardrails. Zoom out and the strategy snaps into focus: better science reasoning, plus better retrieval. ChatGPT search already blends conversation with linked sources; the next step is turning “search” into an autonomous research workflow. When your model can read, compare, and synthesize papers, the browser starts to look like a lab bench.

FrontierScience gives the industry a tougher, more realistic yardstick for “research-grade” AI, so progress becomes measurable rather than a matter of vibes. As scores climb, expect AI search to evolve from “find links” into “run the literature workflow,” compressing weeks of reading into hours.