In Today’s Issue:

💰 OpenAI’s compute margin rockets to 70%

💼 Goldman Sachs warns Gen Z of a junior “jobpocalypse”

⏳ Claude Opus 4.5 posts the longest task time horizon METR has published so far

🔦 Photonic AI chips from China challenge the silicon status quo

✨ And more AI goodness…

Dear Readers,

Today we’re stepping straight into the beam of light-powered AI, where China’s photonic chips promise to run generative models at blistering speed and far lower cost - hinting at a future where Nvidia is no longer the only game in town. In this issue, we connect that hardware shock to the human layer: Goldman Sachs telling Gen Z to prove their commercial impact instead of just collecting degrees, Claude Opus 4.5 stretching the time horizon for long-running tasks, and OpenAI both squeezing more profit out of compute and chasing a jaw-dropping $830B valuation. Threaded through all of it is one question: in a world of light-speed chips, ruthless efficiency drives, and mega-rounds that look more like sovereign budgets, how do you position yourself - not just as a spectator of the AI economy, but as someone who actually benefits from the next wave?

All the best,

Goldman Warns Gen Z: Differentiate

Goldman Sachs executives argue that AI isn’t eliminating all banking jobs, but it is turning graduate hiring into a “jobpocalypse” where only people who clearly show their commercial impact and value survive in a tougher, low-growth environment. The core message for Gen Z: don’t just stack degrees and technical skills - be able to explain exactly how your work makes money, reduces risk, or strengthens client relationships, and double down on human skills like communication, judgment, and collaboration that AI can’t easily replace. If you can quantify your impact and pair it with real people skills, AI shifts from being a competitor to a force multiplier for your career.

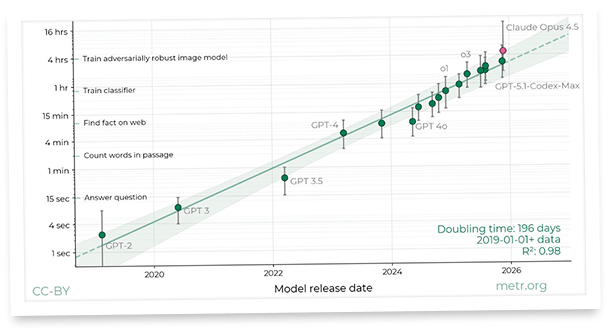

Claude Opus Pushes Long-Task Boundaries

METR reports that Claude Opus 4.5 achieves a 50% time horizon of ~4h 49m (confidence interval: 1h 49m–20h 25m), the highest it has published so far. In other words, on tasks that take human experts about 4h 49m, the model is estimated to succeed about half the time. METR cautions that the upper bound is inflated because its task suite contains few very long tasks. Interestingly, Opus 4.5’s 80% time horizon is only 27 minutes (below GPT-5.1 Codex Max’s 32 minutes), showing it can occasionally nail very long tasks but isn’t consistently reliable yet. Big picture: AI is getting dramatically better at sustained reasoning and long workflows, a meaningful step toward more autonomous, durable AI agents that can actually stick with complex, human-scale tasks.
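How can a ~4h 49m horizon at 50% and a 27-minute horizon at 80% both be true? METR’s published approach fits a logistic curve of success probability against the (log) time human experts need for each task, then reads off where that curve crosses 50% or 80%. The sketch below assumes a simple two-parameter logistic in natural-log task length and backs the parameters out of the two reported horizons. It is an illustration, not METR’s actual fit, and the function names are hypothetical.

```python
import math


def logit(p: float) -> float:
    """Log-odds of probability p."""
    return math.log(p / (1.0 - p))


def fit_from_horizons(h50: float, h80: float) -> tuple[float, float]:
    """Back out (alpha, beta) for the assumed model
    p(t) = sigmoid(alpha - beta * ln t), given 50% and 80% horizons in minutes."""
    beta = logit(0.8) / math.log(h50 / h80)  # slope implied by the gap between horizons
    alpha = beta * math.log(h50)             # makes p(h50) exactly 0.5
    return alpha, beta


def success_prob(t: float, alpha: float, beta: float) -> float:
    """Predicted success probability on a task that takes humans t minutes."""
    return 1.0 / (1.0 + math.exp(-(alpha - beta * math.log(t))))


def horizon(p: float, alpha: float, beta: float) -> float:
    """Task length (minutes) at which predicted success equals p."""
    return math.exp((alpha - logit(p)) / beta)


# Figures from the text: 50% horizon ~4h 49m (289 min), 80% horizon 27 min
alpha, beta = fit_from_horizons(289, 27)
for t in (27, 60, 289, 600):
    print(f"{t:>4} min task: predicted success {success_prob(t, alpha, beta):.0%}")
```

Under this model, the flatter the curve (the smaller `beta`), the wider the gap between the 50% and 80% horizons. A flat curve is what “occasionally nails long tasks but isn’t consistently reliable” looks like in numbers.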

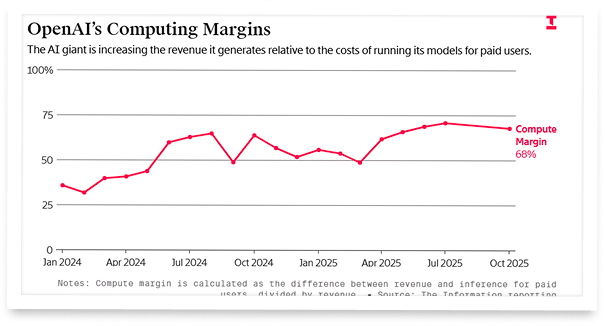

OpenAI’s Compute Margin Rockets Up

OpenAI’s internal numbers show its compute margin on paying users (the share of revenue left after paying for the compute that serves them) jumped from ~35% (Jan 2024) to ~52% (end 2024) to ~70% (Oct 2025), after a “code red” push to cut inference costs and optimize model efficiency. That is a crucial point as it pitches investors on a potential $100B raise. Anthropic still looks set to be more efficient overall (including free users and training), projecting up to 68% compute margin and $60B in compute spend through 2028 versus OpenAI’s massive $220B, but OpenAI’s improving unit economics on paid plans give it more room to subsidize hundreds of millions of free users and experiment with ads, affiliates, and new product tiers. For humans, this matters because better compute efficiency is what keeps advanced AI getting cheaper, more accessible, and more widely deployed, instead of becoming a luxury tool locked behind sky-high prices.
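A quick worked example makes the jump concrete. Assuming compute margin means (revenue − compute cost) / revenue on paying users, its complement is the compute bill per dollar of revenue. The sketch below plugs in the approximate figures above; the function names are illustrative and do not come from any OpenAI report.

```python
def compute_margin(revenue: float, compute_cost: float) -> float:
    """Share of revenue left after paying for compute (assumed definition)."""
    return (revenue - compute_cost) / revenue


def compute_cost_per_dollar(margin: float) -> float:
    """Compute spend per $1 of paid revenue at a given compute margin."""
    return 1.0 - margin


# Approximate margins on paying users, as reported in the text
reported = {"Jan 2024": 0.35, "end 2024": 0.52, "Oct 2025": 0.70}
for period, margin in reported.items():
    cost = compute_cost_per_dollar(margin)
    print(f"{period}: ~${cost:.2f} of compute per $1 of revenue")

# Going from a 35% to a 70% margin cuts compute cost per revenue dollar from 0.65 to 0.30
reduction = 1 - compute_cost_per_dollar(0.70) / compute_cost_per_dollar(0.35)
print(f"Compute cost per revenue dollar fell by ~{reduction:.0%}")
```

Read this way, roughly doubling the margin from 35% to 70% means each paid revenue dollar carries about 30 cents of compute instead of 65, which is the extra room the paragraph above describes for subsidizing free users.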

China’s analogue AI chip could be 1,000 times faster than Nvidia GPU

Light-Speed Chips Challenge Nvidia

The Takeaway

👉 China is developing photonic AI chips that use light instead of electrons to run core neural network operations far faster and more efficiently than traditional GPUs on specific tasks.

👉 These light-based accelerators are specialized, not general-purpose: they work best when integrated into well-defined parts of gen-AI pipelines like image or video generation.

👉 If toolchains and cloud integrations catch up, photonic chips could significantly reduce the cost and energy footprint of large-scale AI inference.

👉 For builders, this signals a shift toward heterogeneous compute stacks, where GPUs, TPUs, and photonic accelerators each handle the workloads they’re best at.

What if the next “GPU moment” doesn’t come from more transistors - but from lasers? A new wave of Chinese photonic (light-based) AI chips is getting attention because it swaps electrons for photons, using optical interference to compute at very high speed while wasting far less energy as heat. The headline act is LightGen, described as an all-optical generative-AI chip with over two million photonic “neurons,” demonstrated on tasks like high-resolution image synthesis, style transfer, denoising, and video manipulation. Separately, ACCEL blends photonics with analog electronics and has reported 4.6 peta-ops/s and very high energy efficiency for vision-style workloads.

The key nuance: this isn’t a drop-in replacement for Nvidia GPUs. Think “purpose-built analog engine” rather than “general programmable workhorse.” But if these architectures keep scaling, they could become the specialized accelerators that make certain gen-AI pipelines dramatically cheaper, faster, and greener. Are we about to see a future where your AI stack is half GPU, half “light engine”?

Why it matters: Photonic accelerators could slash the cost and power footprint of specific generative workloads, turning “expensive demos” into scalable products. If they mature, the bottleneck in AI may shift from raw compute supply to clever workload–hardware co-design.

Sources:

🔗 https://interestingengineering.com/science/china-light-ai-chips-faster-than-nvidia

🔗 https://www.science.org/doi/10.1126/science.adv7434

Turn AI Into Extra Income

You don’t need to be a coder to make AI work for you. Subscribe to Mindstream and get 200+ proven ideas showing how real people are using ChatGPT, Midjourney, and other tools to earn on the side.

From small wins to full-on ventures, this guide helps you turn AI skills into real results, without the overwhelm.

Last Time the Market Was This Expensive, Investors Waited 14 Years to Break Even

In 1999, the S&P 500 peaked. Then it took 14 years to gradually recover by 2013.

Today? Goldman Sachs sounds crazy forecasting 3% annualized returns for 2024 to 2034.

But we’re currently seeing the highest price for the S&P 500 compared to earnings since the dot-com boom.

So, maybe that’s why they’re not alone; Vanguard projects about 5%.

In fact, just about everything now seems priced near all-time highs: equities, gold, crypto, and more.

But billionaires have long diversified a slice of their portfolios with one asset class that is poised to rebound.

It’s post-war and contemporary art.

Sounds crazy, but over 70,000 investors have followed suit since 2019—with Masterworks.

You can invest in shares of artworks featuring Banksy, Basquiat, Picasso, and more.

24 exits later, results speak for themselves: net annualized returns like 14.6%, 17.6%, and 17.8%.*

My subscribers can skip the waitlist.

*Investing involves risk. Past performance is not indicative of future returns. Important Reg A disclosures: masterworks.com/cd.

OpenAI chases $830B valuation

OpenAI is targeting up to $100 billion in fresh financing, in a round that could value the company at as much as $830 billion. That’s not just another fundraising cycle; it’s an attempt to rewrite what a late-stage startup can look like, especially at a moment when the broader AI hype cycle is cooling and investors are suddenly asking tougher questions. The money would fuel massive compute expansion, new models, infrastructure build-outs, and the kind of breathtakingly expensive bets that only a handful of companies on earth can even attempt.

But this move is also a test. Can OpenAI still sell its grand vision when Wall Street is more cautious, when partners like Oracle and CoreWeave face market skepticism, and when even sovereign wealth funds may hesitate at the sheer scale of capital required? At the same time, SoftBank doubling down, Disney stepping in, and ongoing licensing deals show that powerful backers still believe OpenAI isn’t done reshaping the tech landscape. Whether this works or not may define the next phase of the AI economy.