In Today’s Issue:

🧬 DNA storage shrinks civilization-scale data into a coffee mug

🔋 Solid-state batteries push EV range and charge times into gas-car territory

🧠 Why most people still misunderstand how modern AI systems reason

✨ And more AI goodness…

Dear Readers,

Ho ho ho, tech friends! 🎅 This Christmas edition is stuffed like a digital stocking: up front, Poetiq’s ARC-AGI-2 record shows what happens when reasoning gets so cheap and powerful it starts to feel like magic - only it’s very real and very deployable. Then we glide over to DNA data storage turning a coffee mug–sized vial into a vault for civilization’s memories, and Samsung’s sleigh of solid-state batteries promising 600-mile range and 9-minute “pit-stop” charging for future EVs. Along the way, we unwrap a slightly worrying truth: most people still don’t really know how AI works, even as it shapes their lives - so we’re adding a thoughtful panel, some sharp visuals, and a few spicy rumours to help you see what’s really going on behind the glowing interface. If you want to know what’s hiding under the tree for the next decade of AI, energy, and infrastructure, this is the issue you’ll want to read right to the last line.

All the best,

DNA turns into data vault

Atlas Data Storage just unveiled Atlas Eon 100, a DNA-based archival service that can pack 60 petabytes of data into just 60 cubic inches - roughly a coffee mug - enough to store around 660,000 4K movies. The system is aimed at long-term archiving rather than everyday storage: synthetic DNA capsules can in principle preserve data for millennia without power or periodic refresh, while achieving around 1,000x the density of state-of-the-art LTO-10 magnetic tape. For humans, this is a big deal because our exploding AI datasets, cultural archives, and scientific records suddenly get a path to ultra-compact, ultra-durable “civilizational memory” that could outlive any current data center.
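For a quick sanity check of those numbers, here is a back-of-the-envelope sketch using only the figures quoted above. It assumes decimal units (1 PB = 1,000,000 GB), which the announcement as summarized here does not specify.

```python
# Back-of-the-envelope check using only the figures quoted above.
# Assumption: decimal units (1 PB = 1,000,000 GB).
GB_PER_PB = 1_000_000

capacity_pb = 60           # quoted capacity
volume_cubic_inches = 60   # quoted volume
movies = 660_000           # quoted number of 4K movies

# Storage density implied by the quoted capacity and volume.
density_pb_per_cubic_inch = capacity_pb / volume_cubic_inches

# Average file size implied by the "660,000 4K movies" comparison.
gb_per_movie = capacity_pb * GB_PER_PB / movies

print(f"Density: {density_pb_per_cubic_inch:.1f} PB per cubic inch")
print(f"Implied size per 4K movie: {gb_per_movie:.0f} GB")
```

In other words, the headline works out to about one petabyte per cubic inch, with each "4K movie" counted at roughly 91 GB.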

Samsung Unveils 9-Minute 600-Mile Batteries

Samsung announced it is gearing up to produce solid-state EV batteries that could deliver about 600 miles of range and recharge in around 9 minutes, far beyond typical lithium-ion performance. The batteries use safer solid electrolytes and are being developed in partnership with BMW and Solid Power, with production for new vehicles expected in late 2026.

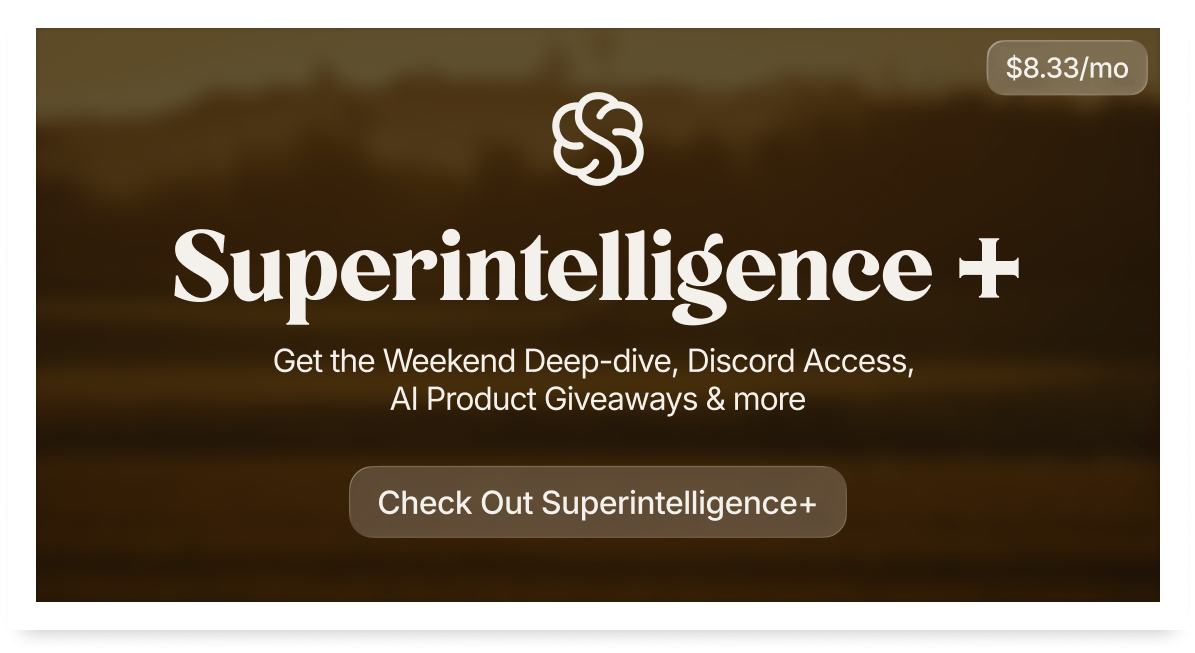

Public misunderstands how AI works

75% of Americans don't know how neural networks work. The largest group (45%) believes AI simply looks up answers in a database, while fewer (28%) understand that it actually predicts the next words based on learned patterns. Others think it runs scripted replies (21%) or even has a human secretly writing responses (6%).

Our editor-in-chief Kim participated in a fascinating panel discussion with an AI professor and a CEO about the future of AI. Even though the discussion is in German, you can still learn a lot with the English subtitles.

Poetiq Smashes ARC-AGI-2 Record

The Takeaway

👉 Treat “scaffolding” as a product lever: better orchestration can unlock big gains without retraining a model.

👉 Track cost-per-solved-task, not just accuracy - under $8 per problem changes what’s deployable in production.

👉 Expect faster capability jumps as new frontier models drop into the same harness with minimal integration work.

👉 If public → semi-private transfer holds, update your agent roadmap: harder reasoning tasks may become commodity faster than planned.
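To make the cost-per-solved-task idea concrete, here is a minimal sketch. The function name is hypothetical, and the inputs are the upper-bound figures quoted in this issue ($8 per problem, up to 75% solved), so the result is illustrative rather than a reported number.

```python
def cost_per_solved_task(cost_per_attempt: float, accuracy: float) -> float:
    """Average spend needed to get one correct solution.

    cost_per_attempt: average cost of running one problem, in dollars.
    accuracy: fraction of problems solved, in the range (0, 1].
    """
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in the range (0, 1]")
    return cost_per_attempt / accuracy


# Illustrative inputs taken from the quoted upper bounds.
print(f"${cost_per_solved_task(8.0, 0.75):.2f} per solved task")
```

At those figures, each solved problem costs roughly $10.67, not $8, because the failed attempts still have to be paid for.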

ARC-AGI-2 is basically an “IQ test” for AI: tiny abstract grid puzzles that punish memorization and reward real pattern-finding. This week, Poetiq says it ran its existing reasoning “harness” on OpenAI’s GPT-5.2 X-High and hit up to 75% on the full PUBLIC-EVAL set - at under $8 per problem - with no training and no model-specific optimization.

That combo matters more than the headline score. It suggests the performance jump isn’t only “a better model,” but a better wrapper: orchestration, search, and test-time reasoning that can plug into new frontier models fast - like swapping an engine into the same race car chassis. If this transfer holds on ARC Prize’s more secure eval tiers, we’re watching the cost/performance curve for reasoning bend sharply downward - and that’s rocket fuel for agentic systems that must plan, debug, and adapt under budget.
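The “same chassis, new engine” idea can be sketched as a toy generate-and-verify loop. This is not Poetiq’s harness, whose internals are not described here. Every name below (`solve`, `toy_model_a`, `toy_model_b`, the grid transforms) is hypothetical, and the “models” are stand-in functions rather than real LLM calls.

```python
from typing import Callable, Iterable, Optional

Grid = list[list[int]]
Transform = Callable[[Grid], Grid]
TrainPairs = list[tuple[Grid, Grid]]
# A "model" is any callable that proposes candidate transforms for a task.
Model = Callable[[TrainPairs], Iterable[Transform]]


def identity(grid: Grid) -> Grid:
    return [row[:] for row in grid]


def flip_horizontal(grid: Grid) -> Grid:
    return [row[::-1] for row in grid]


def flip_vertical(grid: Grid) -> Grid:
    return [row[:] for row in grid[::-1]]


def solve(model: Model, train_pairs: TrainPairs, test_input: Grid,
          max_candidates: int = 10) -> Optional[Grid]:
    """Harness: try the model's candidates and keep the first one that
    reproduces every training pair. The model is a swappable argument."""
    for index, candidate in enumerate(model(train_pairs)):
        if index >= max_candidates:  # simple budget cap on search
            break
        if all(candidate(x) == y for x, y in train_pairs):
            return candidate(test_input)
    return None  # no candidate was consistent with the examples


# Two stand-in "models" that propose candidates in different orders.
def toy_model_a(train_pairs: TrainPairs) -> Iterable[Transform]:
    return [identity, flip_vertical, flip_horizontal]


def toy_model_b(train_pairs: TrainPairs) -> Iterable[Transform]:
    return [flip_horizontal, identity]


train = [([[1, 0], [2, 0]], [[0, 1], [0, 2]])]  # rule: mirror left-right
test = [[3, 0, 0]]

for model in (toy_model_a, toy_model_b):
    print(model.__name__, solve(model, train, test))
```

The harness never changes when the model does. Only the number of candidates it has to check, and therefore the cost, differs between the two.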

If the result holds, yet another benchmark is heading toward saturation in a very short time. Overall, new benchmarks aren't emerging nearly as quickly as the old ones are being saturated.

Why it matters: Cheap, high-accuracy abstract reasoning is a leading indicator for more reliable AI agents in the real world. It also shows how much “systems engineering around the model” can matter as much as the model itself.

Built-In LoRAs, Better Edits

Qwen just dropped Qwen-Image-Edit-2511, a sharper, more “production-minded” upgrade to its image-editing stack - and it’s aimed at one core pain: edits that don’t drift. If you’ve ever told a model “change the jacket” and watched the whole person morph, you know the problem. Qwen says 2511 mitigates that image drift, boosts character identity consistency (including multi-person scenes), and strengthens geometric reasoning - think cleaner spatial edits and even design-style construction lines.

The sneaky-big move: popular community LoRAs are integrated directly into the base model, so you can get effects like lighting enhancement or viewpoint generation without extra fine-tuning. Under the hood, Qwen-Image-Edit is built on the 20B Qwen-Image foundation and combines semantic control (what’s in the image) with appearance control (how it looks), which helps it edit content while keeping the “visual DNA” intact.

If this keeps holding up in real workflows, image editing starts feeling less like gambling - and more like tooling.

Why it matters: Reliable, low-drift editing is the difference between “cool demo” and “shipping asset pipeline.” Built-in LoRA capabilities also point to a future where community creativity becomes a native feature, not a separate integration project.
