Dear Readers,

DeepSeek didn’t just launch a new model; it kicked the door open. When an open-weights system suddenly plays in the same league as GPT-5 and Gemini 3, the center of gravity shifts, and you can feel that shift everywhere right now. This week’s issue starts with that jolt: the moment an open model stops being “the scrappy challenger” and becomes the benchmark everyone else has to react to. It’s a reminder of how quickly power can redistribute in AI, and how fast the ground moves under our feet.

From there, we jump into a cascade of developments shaped by the same pressure. OpenAI declares a “code red,” Chinese open weights quietly power U.S. startups, synthetic songs climb the charts, Mistral drops a frontier-level suite, Runway releases its most cinematic video model yet, and OpenAI’s “Garlic” hints at a new generation of efficient training. Each story amplifies the same question: what happens when breakthroughs no longer come from one direction? Let’s explore it together.

In Today’s Issue:

🧠 DeepSeek's new open-source AI is hitting GPT-5-level reasoning performance

🚀 Mistral 3 is bringing a full suite of optimized open-source AI models

🎬 Runway's new AI video model is setting new records

🧄 OpenAI is fighting back with "Garlic"

✨ And more AI goodness…

All the best,

🚨 OpenAI Hits Emergency Acceleration

Sam Altman has declared a “code red,” redirecting OpenAI’s resources toward rapidly upgrading ChatGPT. That means shipping a new reasoning model that reportedly outperforms Google’s Gemini 3 in internal evaluations and improving speed, reliability, personalization, and image generation, while ads and other products are delayed. The move comes amid rising competitive pressure and slowing growth, with ChatGPT’s performance now tightly linked to OpenAI’s ability to secure the massive funding it needs for its next phase.

💻 Silicon Valley Rides Chinese Code

NBC’s piece shows how a growing wave of U.S. AI startups is quietly building on free, open-weight Chinese models like DeepSeek and Alibaba’s Qwen instead of paying for pricey U.S. APIs. The logic is simple: these models are now “good enough” for many production use cases, cheaper to run, and fully self-hostable, so founders optimize for runway rather than national origin. The twist is that America’s AI boom increasingly sits on foreign weights, raising hard questions about security, regulation, and how special the closed U.S. frontier labs really are.

🎧 AI-Generated Tracks Crash the Charts

Fully synthetic songs, with vocals, instruments, and even artist personas crafted by AI, are no longer fringe experiments; they are racking up millions of streams, topping charts, and winning wide acceptance among listeners. A recent survey found that almost nobody can reliably tell AI-made music apart from human-created tracks, yet many still feel uneasy about the shift. This moment feels like a turning point: AI-driven music is no longer just a novelty; it is becoming embedded in mainstream streaming culture.

OpenAI's Research Chief Refuses To Lose . . . At Anything

The whale is back: DeepSeek-V3.2 & Speciale release!

The Takeaway

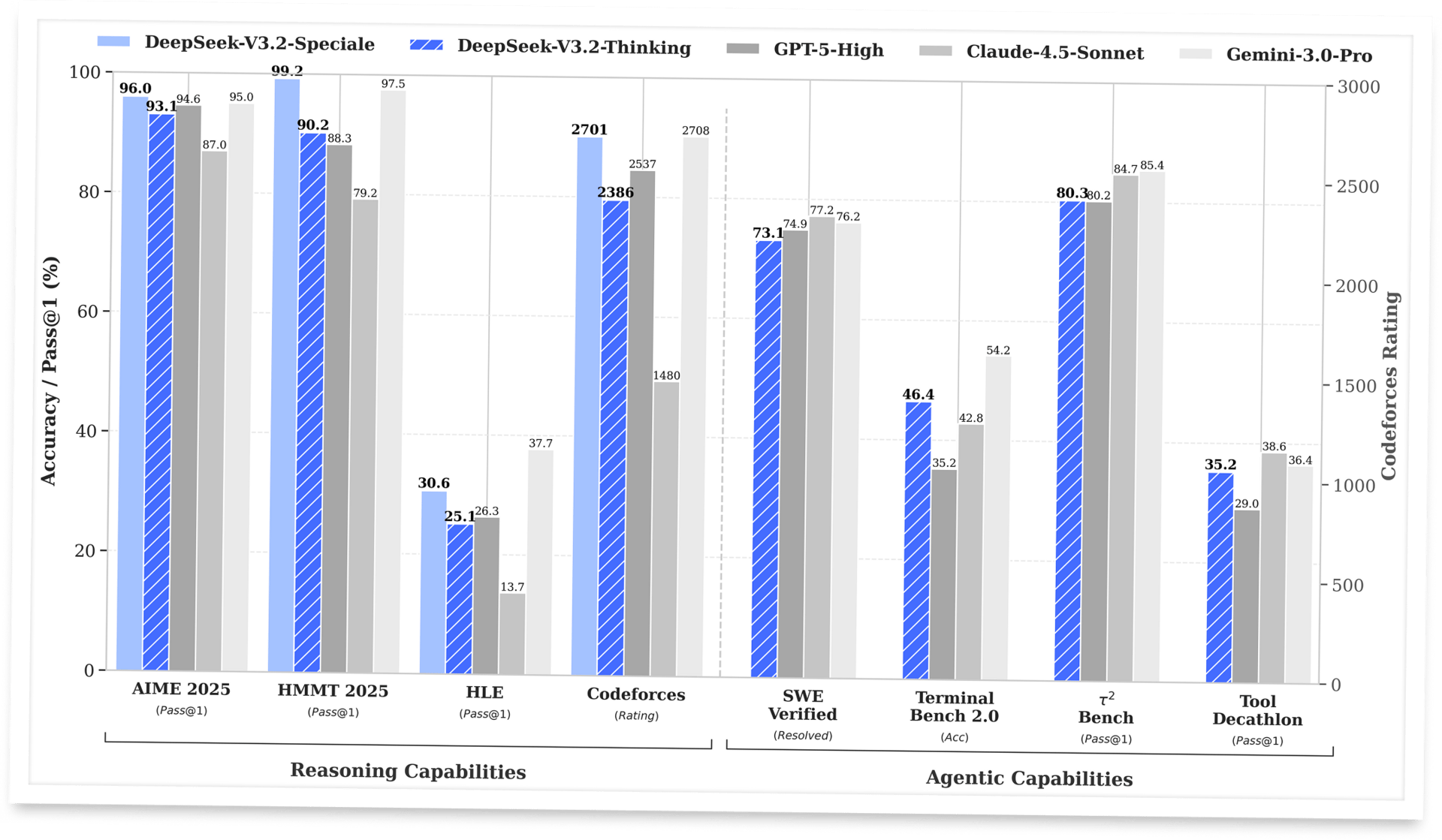

👉 DeepSeek-V3.2 delivers GPT-5-level reasoning in an open-weights model, making frontier-tier performance broadly accessible.

👉 The Speciale variant pushes even higher, reportedly outperforming major closed models on hard reasoning tasks like advanced math and code.

👉 Its sparse-attention architecture enables long-context performance at lower compute cost than standard dense attention.

👉 With 1,800+ agent-training environments, the model is explicitly optimized for tool use, multi-step reasoning, and autonomous agent workflows.

An open model just walked onto the stage and started playing in the same league as GPT-5 and Gemini 3, and it’s not shy about it. DeepSeek-V3.2 is a “reasoning-first” model built for agents, with the Speciale variant cranking up compute to maximize hard-reasoning performance.

Under the hood, DeepSeek Sparse Attention trims the cost of long-context attention by letting each token attend to a selected subset of earlier tokens instead of all of them, while preserving output quality, so you can run very long prompts without burning through compute.
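For readers who want to see the idea in code, here is a minimal, generic top-k sparse attention sketch in plain Python. It is not DeepSeek's implementation: the function name `topk_sparse_attention`, the parameter `k`, the toy inputs, and the choice to reuse the attention dot product as the selection score are all illustrative assumptions. Each query scores the tokens it is allowed to see, keeps only the `k` best, and runs softmax attention over that subset, so the softmax and value mixing grow with `k` rather than with the full context length.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector], k: int
) -> List[Vector]:
    """Causal attention where each query attends only to its top-k visible tokens.

    Illustrative assumption: the same dot product is used both to select tokens
    and as the attention logit. Real systems typically use a separate, cheaper
    scorer for the selection step.
    """
    d = len(queries[0])
    outputs: List[Vector] = []
    for t, q in enumerate(queries):
        # Causal mask: query t may only look at positions 0..t.
        candidates = range(t + 1)
        # Selection step: keep the k highest-scoring visible positions.
        selected = sorted(candidates, key=lambda j: dot(q, keys[j]), reverse=True)[:k]
        # Attention step: scaled softmax over the selected positions only.
        logits = [dot(q, keys[j]) / math.sqrt(d) for j in selected]
        m = max(logits)
        weights = [math.exp(z - m) for z in logits]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the selected value vectors.
        out = [0.0] * len(values[0])
        for w, j in zip(weights, selected):
            for i, v in enumerate(values[j]):
                out[i] += w * v
        outputs.append(out)
    return outputs


# Toy inputs (made up for illustration): 4 tokens with 2-dim vectors.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, 2.0]]

if __name__ == "__main__":
    # Each query keeps at most 2 of the tokens it can see.
    print(topk_sparse_attention(Q, K, V, k=2))
```

With `k` at least as large as the sequence length, the sketch reduces to ordinary causal attention; shrinking `k` is where the savings come from.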

A scaled-up RL stack and a massive agentic training pipeline (1,800+ environments and 85k complex instructions) teach the model to reason, use tools, and navigate interactive tasks instead of just predicting the next token. On benchmarks, V3.2 sits around GPT-5 territory, while Speciale reportedly edges past it on difficult reasoning workloads and reaches gold-medal-level performance on IMO/IOI-style problems.

Because the weights are open on Hugging Face and the model is available via API (and even free chat), this isn’t just a leaderboard flex; it’s a new building block for anyone shipping agents, copilots, or automated research systems.

Why it matters: DeepSeek-V3.2/Speciale shows that frontier-level reasoning is no longer reserved for closed, ultra-expensive stacks. That shifts power toward builders: startups, researchers, and indie devs can now prototype serious agent systems on open infrastructure instead of waiting for the next big closed release.

Sources:

🔗 https://huggingface.co/deepseek-ai/DeepSeek-V3.2

Run IRL ads as easily as PPC

AdQuick unlocks the benefits of out-of-home (OOH) advertising in a way no one else has. It approaches the problem with an eye to performance and is built for marketers with the engineering excellence you’ve come to expect from the internet.

Marketers agree OOH is one of the best ways to build brand awareness, reach new customers, and reinforce your brand message. It’s just been difficult to scale. But with AdQuick, you can plan, deploy, and measure campaigns as easily as digital ads, making them a no-brainer to add to your team’s toolbox.

You can learn more at AdQuick.com

Mistral’s Next-Gen Open AI Suite

Mistral 3 introduces a full next-gen open-source family: three small dense “Ministral” models (3B, 8B, 14B) plus Mistral Large 3, a frontier-level sparse mixture-of-experts (MoE) model with 41B active and 675B total parameters, trained on 3,000 Nvidia H200 GPUs and released under Apache 2.0. Large 3 reaches state-of-the-art results among open-weight instruction models, adds multimodal and multilingual capabilities, and is optimized together with Nvidia (TensorRT-LLM, SGLang, Blackwell kernels) for efficient deployment from laptops to GB200 clusters. The Ministral models deliver best-in-class cost-to-performance, multimodality, and new reasoning variants, making this one of the strongest fully open AI releases to date.
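The “41B active / 675B total” split is what keeps a sparse MoE affordable to run: a router sends each token to only a few experts, so roughly 41/675 ≈ 6% of the weights do work for any given token. Below is a minimal, generic top-k router in plain Python to illustrate the mechanism; the function names, the eight experts, `top_k=2`, and the gate scores are hypothetical and do not reflect Mistral Large 3's actual configuration.

```python
import math
from typing import List, Tuple


def route_token(gate_logits: List[float], top_k: int) -> List[Tuple[int, float]]:
    """Pick the top_k experts for one token and renormalize their gate weights.

    gate_logits: one router score per expert.
    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Softmax over the chosen experts only; the rest get zero weight (not computed).
    m = max(gate_logits[i] for i in chosen)
    exps = [math.exp(gate_logits[i] - m) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def active_fraction(active_params_b: float, total_params_b: float) -> float:
    """Share of parameters used per token in a sparse MoE."""
    return active_params_b / total_params_b


# Hypothetical router scores for 8 experts.
example_logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]

if __name__ == "__main__":
    # Only the 2 highest-scoring experts run for this token.
    print(route_token(example_logits, top_k=2))
    # Figures quoted for Mistral Large 3: 41B active out of 675B total.
    print(f"{active_fraction(41, 675):.1%}")
```

Production MoE layers typically also add a load-balancing mechanism so tokens spread evenly across experts; that is omitted here to keep the routing step visible.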

Runway Gen-4.5 sets a new record

Runway has released Gen-4.5, the latest version of its AI video model. It produces highly realistic, cinematic clips from text prompts, with convincing motion, physics, lighting, and detail that make objects, liquids, and characters behave believably, even outperforming Google's Veo 3 on benchmarks.

OpenAI plans to release “Garlic” early next year

OpenAI’s new “Garlic” model reportedly delivers major pretraining breakthroughs, letting the company pack big-model knowledge into much smaller architectures that still outperform Google’s Gemini 3 and Anthropic’s Claude Opus 4.5 in internal coding and reasoning tests. Garlic addresses structural issues in earlier models like GPT-4.5 and could launch as GPT-5.2 or GPT-5.5 early next year, marking OpenAI’s attempt to regain momentum after Altman’s “code red.” The model isn’t finished yet, but if its evaluations translate to real-world performance, it signals that scaling efficiency isn’t plateauing after all.

Moosend: Craft Emails That Convert

Stand out with personalized campaigns that build loyal customers. Grow your audience and scale your business effortlessly.