Dear Readers,

What if one person’s life story captured the entire tension of the AI age - between wild ambition and genuine fear of losing control? Today’s DeepDive follows Ilya Sutskever from Soviet Gorky to Geoffrey Hinton’s lab, through AlexNet, seq2seq and GPT, into the chaos of the Sam Altman coup and finally to his new moonshot, Safe Superintelligence Inc., where he wants to build one thing only: safe superintelligence. Alongside that, you’ll get a sharp look at what SSI is really trying to do over the next five years, why “reasoning models” like o1 change the risk landscape, and how a new generation of labs might reshape who actually steers this technology. If you want to understand not just what Ilya saw, but what he might build next - and what it means for all of us - this is the issue you don’t want to skim.

Oh, and if you want to discuss this DeepDive with the community, join our Discord: https://discord.gg/jFEpMAq8

All the best,

Kim Isenberg

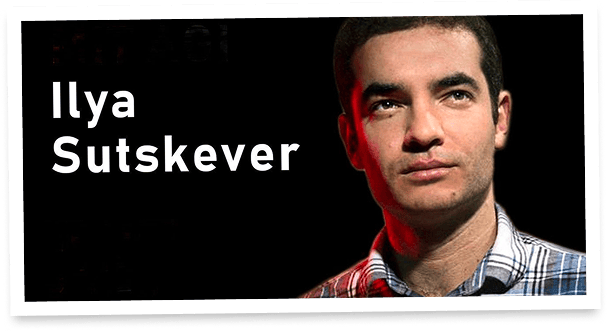

The life of Ilya Sutskever

“In a nutshell, I had the realization that if you train a large neural network, a large and deep neural network, on a big enough dataset that specifies some complicated task that people do, such as vision, then you will succeed necessarily. And the logic for it was irreducible; we know that the human brain can solve these tasks and can solve them quickly. And the human brain is just a neural network with slow neurons. So, then we just need to take a smaller but related neural network and train it on the data. And the best neural network inside the computer will be related to the neural network that we have in our brains that performs this task.”

— Ilya Sutskever
