Dear Readers,

Today is Research Wednesday, so we have summarized the hottest AI papers of the past week for you! Also in this issue: Sakana AI presents the Continuous Thought Machine, a big breakthrough.

But before we get to today's main article: following yesterday's interview, we would like to point you to Hashem Al-Ghaili's latest video, which he uploaded today. It is 100% AI-generated! He wrote about it:

"I’m happy to share my new AI-assisted short film, “The Colorless Man.” This film took 2 weeks to complete during my free time, with a budget of $600 USD. I used various AI tools to explore how far AI-assisted film production has come. Based on estimates from other producers and filmmakers, a film like this would typically cost between $300K and $500K without AI, depending on the scale of production. It would also require around 70 people and at least 2 months of work. Thanks to AI, this was reduced to just 1 person, $600 USD, and 2 weeks of non-continuous work. First, I wrote the story and screenplay, then I used various AI tools to turn my script into visuals. I used ChatGPT, MidJourney, and Dreamina for images; Kling AI for videos; ElevenLabs for voices; Dreamina AI for lip sync; Suno AI for music; and MMAudio or ElevenLabs for sound effects."

— Hashem Al-Ghaili

In Today’s Issue:

Sakana AI designs an AI that thinks like a human brain

New discovery shows nanotubes can glow brighter when heated

Microsoft adds real-time screen understanding to Windows Copilot

Scientists observe a molecule shutting down heat at room temperature

And more AI goodness

All the best,

Sakana AI presents the Continuous Thought Machine

The TLDR

Japanese startup Sakana AI has unveiled the Continuous Thought Machine, a new AI model that solves problems by thinking sequentially rather than all at once. Inspired by the brain’s temporal dynamics, it offers more explainable and energy-efficient decision-making, marking a potential paradigm shift in how AI “thinks.”

Imagine an AI that doesn't just answer at lightning speed but takes time to think about a problem step by step, just like a human. This is exactly what the Japanese start-up Sakana AI has developed with its Continuous Thought Machine (CTM).

In contrast to conventional neural networks, which process an input in a single pass, the CTM uses the synchronization of neurons over time. These temporal dynamics enable the model to handle complex tasks such as solving mazes or classifying images not only more efficiently but also more interpretably. The CTM actively “looks” at different areas of the image, similar to the human eye, and makes decisions based on these focused observations.
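For readers who like to see an idea in code, here is a minimal sketch of one way to read "synchronization of neurons over time": record each neuron's activation at every internal step and compare the recorded histories pairwise. The function name `synchronization`, the toy sine-wave traces, and the use of a plain inner product are our own illustrative assumptions, not Sakana AI's implementation.

```python
import math


def synchronization(traces):
    """Return pairwise inner products of activation histories.

    traces[i] is the list of activations of neuron i over time.
    A large positive entry means two neurons rise and fall together,
    a negative entry means they move in opposite directions.
    (Illustrative assumption: a plain, unnormalized inner product.)
    """
    n = len(traces)
    return [
        [sum(a * b for a, b in zip(traces[i], traces[j])) for j in range(n)]
        for i in range(n)
    ]


# Toy data: neurons 0 and 1 oscillate in phase, neuron 2 in anti-phase.
in_phase = [math.sin(0.5 * t) for t in range(20)]
anti_phase = [-x for x in in_phase]
traces = [in_phase, in_phase, anti_phase]

sync = synchronization(traces)
print("neuron 0 vs 1:", sync[0][1])  # positive: synchronized
print("neuron 0 vs 2:", sync[0][2])  # negative: anti-synchronized
```

In this toy picture, the table of pairwise values, rather than any single activation, is what a downstream layer would read to make a decision.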

This is a significant step for the AI community: CTM brings us closer to an artificial intelligence that is not only powerful, but also interpretable and energy-efficient. It shows that new horizons can be opened up by integrating biological principles into AI models.

Could this be the beginning of a new era in which AI not only thinks faster, but also deeper?

Why it matters: The Continuous Thought Machine from Sakana AI demonstrates how temporal dynamics and neural synchronization can make AI systems not only more powerful, but also more human-like. It paves the way for an AI that thinks like us, only faster and more precisely.

In The News

Glowing Against the Rules: Carbon Nanotubes Defy Physics

Japanese researchers have discovered that carbon nanotubes can emit light with more energy than the light they absorb, something long thought impossible. This phenomenon, called up-conversion photoluminescence, is powered by tiny atomic vibrations known as phonons, which supply the extra energy to the electrons. Even stranger, heating the nanotubes increases their glow, the opposite of how overheating technology typically behaves. This breakthrough could revolutionize solar energy, optical cooling, and light-powered devices.

Copilot Gets Eyes—For Free

Microsoft’s latest Copilot update brings real-time screen vision and interaction to all Windows users, no subscription required. Just open Copilot, click the vision icon, and get instant AI help with whatever’s on your screen. It is like ChatGPT with eyes, built into your laptop.

Molecule Silences Heat with Physics Trick

Scientists have observed a single molecule canceling its own heat flow through destructive interference, in which atomic vibrations cancel each other out. This breakthrough could revolutionize cooling in electronics and lead to smarter heat management at the molecular level.

Graph of the Day

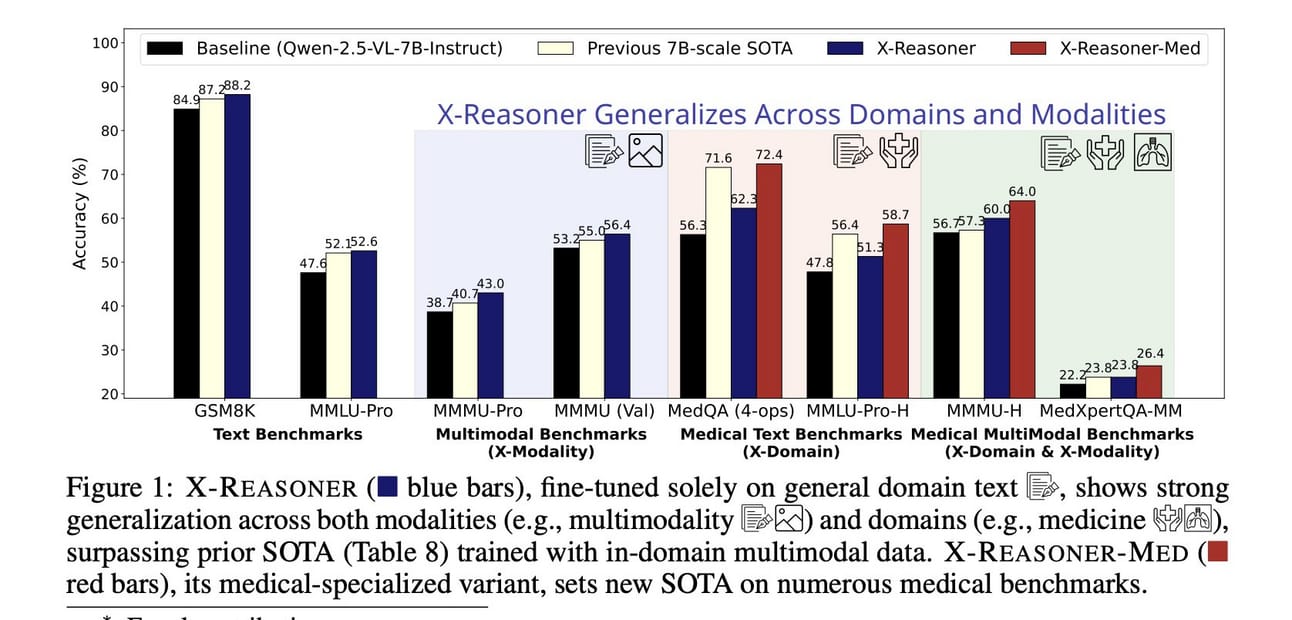

Absolute Zero: Reinforced Self-play Reasoning with Zero Data

Researchers introduce “Absolute Zero”: an AI called the “Absolute Zero Reasoner” (AZR) learns complex tasks such as programming and mathematics by setting itself problems and solving them, without any external training data. The surprise: AZR outperforms models that have been trained with huge, human-curated datasets. This could break the data bottleneck for AI and enable more autonomous systems that transform technology and society.

Rewriting Pre-Training Data Boosts LLM Performance in Math and Code

Quality over quantity in AI training data: Researchers show that rewriting code and math datasets instead of just filtering them dramatically improves the performance of AI models. The new “SwallowCode” and “SwallowMath” datasets, enhanced by AI, significantly increase capabilities. This “transform and retain” approach is a breakthrough for more specialized and powerful AI, as it maximizes the value of existing data.

All Roads Lead to Likelihood: The Value of Reinforcement Learning in Fine-Tuning

Researchers are investigating why reinforcement learning (RL) often works better than direct optimization (maximum likelihood estimation) when fine-tuning language models. The core idea is that RL has an advantage in tasks with a “generation-verification gap,” where it is easier to verify a solution than to generate one. RL first learns a simpler “verifier” model and then optimizes the “generator” model against it. This is often more efficient than training the complex generator directly and leads to better results despite a theoretical loss of information.
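To make the “generation-verification gap” tangible, here is a small toy sketch of our own, not taken from the paper. It uses the subset-sum puzzle (pick numbers that add up to a target): checking a proposed answer takes one quick pass, while finding an answer by brute force means trying exponentially many subsets. The helper names `verify`, `generate_by_search`, and `best_of_n` are hypothetical, and random sampling filtered by a verifier is only a stand-in for RL, not the method the researchers analyze.

```python
import random
from itertools import combinations


def verify(numbers, target, candidate):
    """Cheap check: indices are distinct, in range, and sum to target."""
    return (
        len(set(candidate)) == len(candidate)
        and all(0 <= i < len(numbers) for i in candidate)
        and sum(numbers[i] for i in candidate) == target
    )


def generate_by_search(numbers, target):
    """Expensive generation: brute force over all non-empty subsets."""
    for size in range(1, len(numbers) + 1):
        for candidate in combinations(range(len(numbers)), size):
            if verify(numbers, target, candidate):
                return list(candidate)
    return None


def best_of_n(numbers, target, n_samples, rng):
    """Weak random 'generator' filtered by the cheap verifier.

    Returns the first sampled candidate the verifier accepts, or None.
    """
    for _ in range(n_samples):
        size = rng.randint(1, len(numbers))
        candidate = rng.sample(range(len(numbers)), size)
        if verify(numbers, target, candidate):
            return candidate
    return None


numbers = [3, 34, 4, 12, 5, 2]
target = 9

print("brute-force answer:", generate_by_search(numbers, target))
print("verifier accepts [2, 4]:", verify(numbers, target, [2, 4]))
print("best-of-n answer:", best_of_n(numbers, target, 200, random.Random(0)))
```

The point of the toy: the verifier is a few lines and runs instantly, so even a weak generator can be steered by it, which is the intuition behind learning a simple verifier first.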
