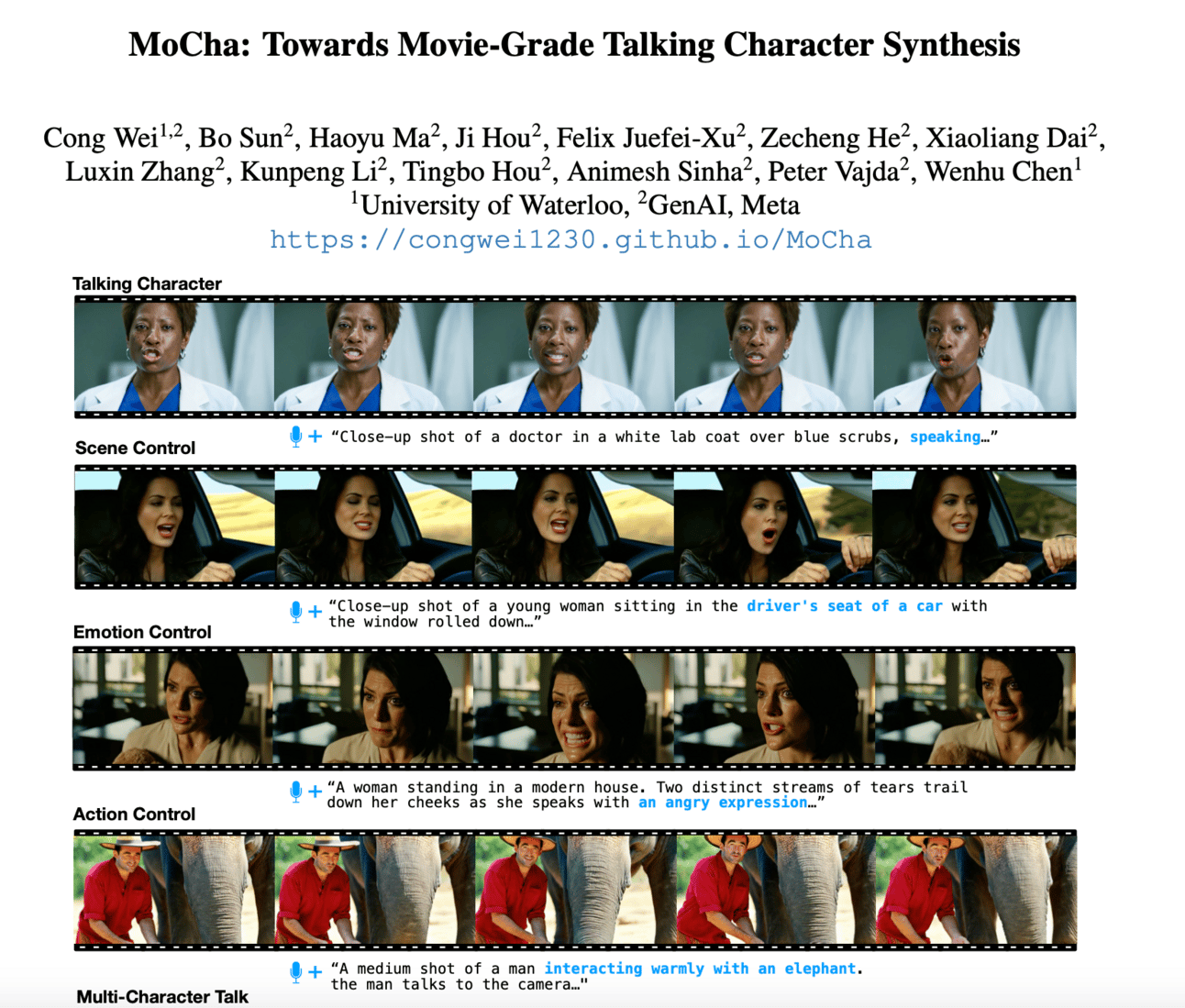

Meta's MoCha Solves AI Lipsync Once And For All!

The TLDR

Meta and University of Waterloo unveil MoCha, a 30-billion parameter system for synchronized lip movements and natural body language. The technology renders full character animations from multiple angles. Speech-Video Window Attention solves previous limitations in AI video generation.

Imagine AI-generated movie characters that not only speak, but also gesture and interact authentically - Meta and the University of Waterloo are making this a reality with the new “MoCha” system!

Meta and researchers at the University of Waterloo have developed a groundbreaking AI system that perfects synchronized lip movements and natural body language. Unlike previous models that focused only on faces, MoCha can render full character animations from multiple camera angles - including precise lip synchronization and interactions between multiple characters.

At the heart of this innovation is the “Speech-Video Window Attention” mechanism, which solves two persistent problems in AI video generation: video compression during processing and mismatched lip movements. MoCha limits each frame's access to a specific window of audio data, similar to how human speech works - lip movements follow immediate sounds, while body language corresponds to broader text patterns.
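To make the windowing idea concrete, here is a minimal sketch of a mask that lets each video frame attend only to nearby audio tokens. The function name, the alignment rule, and the window size are illustrative assumptions; the newsletter does not give MoCha's actual layout or parameters.

```python
def window_attention_mask(num_frames, num_audio_tokens, window):
    """Return mask[f][a] == True when video frame f may attend to audio token a.

    Hypothetical sketch: frame f is aligned to the audio token at the same
    relative position in time and may see `window` tokens on either side.
    """
    mask = []
    for f in range(num_frames):
        # Map the frame index onto the audio timeline
        # (assumes video and audio cover the same duration).
        center = (f * num_audio_tokens) // num_frames
        lo = max(0, center - window)
        hi = min(num_audio_tokens - 1, center + window)
        mask.append([lo <= a <= hi for a in range(num_audio_tokens)])
    return mask


# Toy example: 4 video frames, 8 audio tokens, window of 1 token per side.
mask = window_attention_mask(num_frames=4, num_audio_tokens=8, window=1)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each printed row is one video frame, and the `x` marks slide along the audio timeline as the frame index grows. In this sketch only the audio is windowed; text conditioning, which drives broader body language, would stay unmasked.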

With 30 billion parameters and the ability to generate HD video clips of around five seconds, MoCha outperforms existing systems in lip-syncing and natural movements. Will we soon see complete, AI-generated movies? The future of digital filmmaking is at an exciting turning point!

Chart of the Day

“We evaluated it [Gemini 2.5 Pro] on GPQA Diamond, and found a score of 84%, exactly matching the result reported by Google. This is the best result we have found on this benchmark to date.” (Epoch.ai)

AI Research

Inference-Time Scaling for Generalist Reward Modeling

DeepSeek-AI researchers present DeepSeek-GRM, a new approach to AI reward models that scores AI responses more accurately through a new training method (SPCT) and additional compute at runtime, scaling more efficiently than previous methods.

The problem: In order to make AIs (such as LLMs) better and safer, “AI evaluators” (reward models, RMs) are often used to distinguish good from bad behavior. Previously, these evaluators were made better by training them to be larger (expensive!) or they were only good for very specific tasks.

New training approach (SPCT): This paper introduces “Self-Principled Critique Tuning” (SPCT). It is a new method that teaches the AI evaluator to dynamically develop and apply its own evaluation principles, depending on the request. This makes it more flexible and better suited to a wide range of general tasks.

The breakthrough - efficient scaling at runtime: Instead of just relying on more training, the same AI evaluator (DeepSeek-GRM) becomes significantly better at evaluation time (inference time) if it is given more computing time. It generates several evaluation approaches and combines them intelligently (voting or meta-RM), which leads to more precise judgments.
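The combining step can be sketched in a few lines. This is a simplified illustration, not DeepSeek's implementation: the `vote` function, the score scale, the sample values, and the `quality` ratings standing in for a meta-RM are all assumptions made for the example.

```python
def vote(sampled_scores, quality=None, keep=None):
    """Combine several sampled evaluations of the same candidate responses.

    sampled_scores: list of evaluations, each a list with one score per response.
    quality / keep: optional meta-RM-style filter (hypothetical): keep only the
    `keep` evaluations that the meta-RM rated most reliable before voting.
    """
    rows = sampled_scores
    if quality is not None and keep is not None:
        ranked = sorted(zip(quality, rows), key=lambda pair: pair[0], reverse=True)
        rows = [row for _, row in ranked[:keep]]
    # Voting: sum each response's scores across the remaining evaluations.
    totals = [sum(column) for column in zip(*rows)]
    best = max(range(len(totals)), key=totals.__getitem__)
    return totals, best


# Three hypothetical sampled evaluations of two responses (scores from 1 to 10).
samples = [
    [6, 7],  # this single evaluation prefers response 1
    [8, 5],
    [7, 6],
]
print(vote(samples))                                   # ([21, 18], 0)
print(vote(samples, quality=[0.2, 0.9, 0.8], keep=2))  # ([15, 11], 0)
```

A single evaluation can be noisy (the first one above prefers response 1), but the combined vote settles on response 0. Sampling more evaluations spends more compute at inference time in exchange for a more stable judgment.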

Efficiency & performance: The key result is that a smaller model, given more computing time at runtime, can match or even exceed the performance of a much larger, more elaborately trained model. This is potentially a much more efficient way to increase performance.

Importance to society: Better and more efficient AI evaluators are key to developing more trustworthy, safe and human-centric AI systems. This approach can help make AIs more reliable, which is hugely important for their use in all areas of life - from education to creativity to critical applications.

In The News

Gemini Adds Deep Research

Google now powers Deep Research with Gemini 2.5 Pro, enhancing information synthesis and analytical capabilities. Gemini Advanced users receive improved report generation across all platforms.

Amazon Launches Nova Sonic Model

Amazon launches Nova Sonic, a speech-to-speech model that preserves tone, style and conversation flow. The unified system simplifies natural voice application development.

Hi All,

Thank you for reading. We would be delighted if you shared the newsletter with your friends! We look forward to expanding the newsletter in the future with even more specialized topics. Until then, follow us on social media to stay up to date.

Cheers,

Dan