In Today’s Issue:

🔎 Google launches the Gemini Deep Research API

🏛️ The White House plans an executive order to preempt state AI laws

🏰 Disney invests $1 billion in OpenAI and partners to use Sora

💡 Microsoft predicts seven major AI shifts for 2026

✨ And more AI goodness…

Dear Readers,

Is the U.S. quietly flipping the switch on a new AI era? Today’s first story dives into a White House executive order designed to replace fragmented state AI rules with a single federal framework: less friction, more speed, and a clear signal that Washington wants AI to scale fast. From there, this issue traces where momentum is building: Disney’s $1B bet on OpenAI and Sora-powered fan videos, Microsoft’s vision of AI agents as secure digital coworkers by 2026, Google turning Deep Research into an API for real investigative workflows, and Jerome Powell hinting that AI may already be nudging the labor market. Dive in, because this week the future feels less theoretical and far more real.

All the best,

🇺🇸 White House executive order “Eliminating State Law Obstruction of National Artificial Intelligence Policy”

NATIONAL POLICY FRAMEWORK FOR ARTIFICIAL INTELLIGENCE: The order directs the federal government to establish a unified national AI policy that prevents individual state laws from creating a patchwork of regulations seen as burdensome for AI innovation and interstate commerce. It creates a federal task force to challenge state AI laws that conflict with this national framework and calls for legislative recommendations to preempt conflicting state regulations while preserving certain protections, such as child safety. The U.S. is going all in on AI.

🧸 Disney Invests $1B in OpenAI

Disney is making one of Hollywood’s biggest moves yet into generative AI, announcing a $1 billion investment in OpenAI alongside a three-year licensing partnership that will let users create short videos in Sora featuring more than 200 characters across Disney, Pixar, Marvel, and Star Wars. Under the deal, fans will be able to generate and share “user-prompted” short-form clips built from Disney’s character library — including not just characters, but also related props, vehicles, costumes, and environments — while Disney plans to make a curated selection of these fan-made Sora videos available to stream on Disney+ starting in 2026.

💻 Seven AI shifts for 2026

Microsoft argues that 2026 is when AI shifts from “answering” to collaborating, with agents acting like digital coworkers that help small teams punch above their weight. The catch: agents need human-grade security, meaning a clear identity and tightly scoped access. AI will also push into real-world healthcare. Microsoft cites a WHO-projected shortage of 11M health workers that would leave 4.5B people without essential services, and points to MAI-DxO scoring 85.5% on complex cases versus 20% for experienced physicians (plus 50M+ health questions answered daily via Copilot and Bing). On the build and breakthrough side, the list includes smarter “AI superfactories”; repository intelligence, as GitHub hits 43M merged PRs per month (+23%) and 1B commits (+25%); and hybrid quantum+AI, where Microsoft says quantum advantage is “years, not decades,” highlighting Majorana 1 and a path toward millions of qubits on a chip.

The Macro Force of the Decade: How AI, Energy & Geopolitics Are Merging w/ Kim Isenberg

Google is taking another leap into Deep Research

The Takeaway

👉 Google is bringing Gemini Deep Research to developers via an API, so apps can run multi-step web research (plan → browse → synthesize) instead of just chatting.

👉 The launch is paired with DeepSearchQA, an open benchmark designed to test whether research agents are thorough and evidence-driven, not just good at sounding confident.

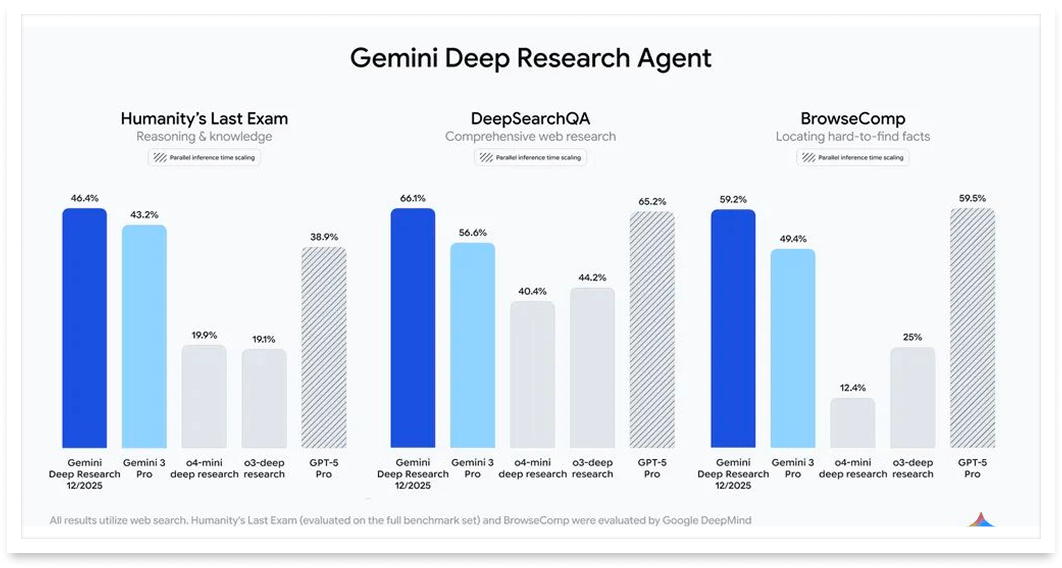

👉 Google reports strong benchmark results for the research agent (including BrowseComp and Humanity’s Last Exam) and positions Gemini 3 Pro as its most factual model for long-form investigation.

👉 Deep Research is also slated to roll into more Google products (like Search and NotebookLM), signaling a broader shift from “answer bots” to research workflows built into everyday tools.

Google just turned “research” into an API call. Gemini Deep Research, Google’s long-horizon web-research agent, is now available to developers via the new Interactions API, letting you embed an autonomous “analyst-in-a-box” in your app: it plans, searches, reads, identifies gaps, then digs deeper into sites to find the missing data.
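To make the plan, search, read, find gaps, dive deeper loop a little more concrete, here is a toy sketch in Python. It does not call the Gemini or Interactions API at all: the in-memory `CORPUS`, the `has_gap` heuristic, and the `research` function are hypothetical stand-ins that only illustrate the general shape of such a loop, not how Google’s agent actually works.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the web: page id -> (page text, outgoing links).
CORPUS = {
    "overview": ("Topic X has two drivers, A and B.", ["driver-a", "driver-b"]),
    "driver-a": ("A was measured at 12 units in 2024.", []),
    "driver-b": ("B is covered in more depth elsewhere.", ["driver-b-detail"]),
    "driver-b-detail": ("B was measured at 7 units in 2024.", []),
}


@dataclass
class ResearchState:
    question: str
    frontier: list[str]                           # the plan: pages still to visit
    visited: list[str] = field(default_factory=list)
    findings: list[str] = field(default_factory=list)


def has_gap(text: str) -> bool:
    """Toy gap detector: a page with no number has not answered the question yet."""
    return not any(ch.isdigit() for ch in text)


def research(question: str, start_pages: list[str], max_steps: int = 10) -> tuple[str, ResearchState]:
    state = ResearchState(question, list(start_pages))
    for _ in range(max_steps):                    # bounded, multi-step loop
        if not state.frontier:
            break
        page = state.frontier.pop(0)              # browse: take the next planned page
        if page in state.visited or page not in CORPUS:
            continue
        state.visited.append(page)
        text, links = CORPUS[page]                # read the page
        if has_gap(text):
            state.frontier.extend(links)          # gap found: dive deeper via its links
        else:
            state.findings.append(text)           # evidence found: keep it
    report = " ".join(state.findings)             # synthesize the collected evidence
    return report, state


report, state = research("How large are A and B?", ["overview"])
print(report)
print(state.visited)
```

The point of the sketch is the control flow: the agent does not answer after one lookup, it keeps following links until its (here, very crude) gap check is satisfied or a step budget runs out.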

Google says the agent is powered by Gemini 3 Pro (positioned as its most factual model) and tuned to reduce hallucinations during complex, multi-step work. The companion drop is DeepSearchQA, an open-sourced benchmark with 900 hand-crafted “causal chain” tasks across 17 fields that tests whether agents are comprehensive, not just lucky. On evals, Google reports 46.4% on Humanity’s Last Exam, 66.1% on DeepSearchQA, and 59.2% on OpenAI’s BrowseComp—while targeting well-researched reports at lower cost. Google says Deep Research will soon land in Google Search, NotebookLM, Google Finance, and an upgraded Gemini app.
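One common way to reward comprehensiveness over a lucky single hit is to grade an agent’s list of answers against a reference list using precision, recall, and F1. The Python sketch below assumes that kind of set-based scoring purely for illustration; it is not DeepSearchQA’s published grader, and the example answers are made up.

```python
def normalize(items):
    """Compare answer items case- and whitespace-insensitively."""
    return {item.strip().lower() for item in items}


def set_scores(predicted, expected):
    pred, gold = normalize(predicted), normalize(expected)
    hits = len(pred & gold)                           # correct items found
    precision = hits / len(pred) if pred else 0.0     # how much of the answer is right
    recall = hits / len(gold) if gold else 0.0        # how much of the truth was found
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


expected = ["Germany", "France", "Italy", "Spain"]    # hypothetical reference list

# A "lucky" agent names one correct item and stops.
lucky = set_scores(["germany"], expected)
# A thorough agent finds three of four items, plus one wrong extra.
thorough = set_scores(["Germany", "France", "Italy", "Portugal"], expected)
print(lucky)
print(thorough)
```

The lucky agent gets perfect precision but only 25% recall (F1 of 0.4), while the thorough agent scores 0.75 on all three metrics, which is exactly the distinction between being right and being comprehensive.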

If your product depends on “what’s true on the internet right now,” this marks the shift from chatbots to workflows: research as a native feature.

Why it matters: We’re moving from “LLMs that talk” to “agents that investigate,” which is a huge unlock for builders shipping real decision-support products. The teams that win will treat research like an asynchronous pipeline (not a single prompt) and measure it like a product.

Sources:

🔗 https://blog.google/technology/developers/deep-research-agent-gemini-api/

Bring Ideas to Life in 3D: Fast, Free & AI-Powered with Hitem3D.ai

Turn text or images into 3D assets fast with Hitem3D.ai. No complex tools: export OBJ/FBX/GLB, auto texture & refine with AI. Create game, VR/AR, animation models in minutes. Start free and bring ideas to life!

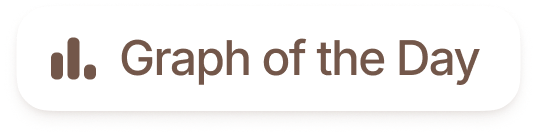

GPT-5.2 reportedly hallucinates roughly 30-40% less than its predecessor. That’s big, and I love it!

AI Enters Fed’s Job Story

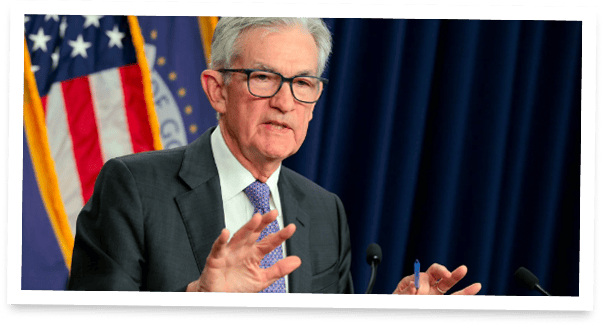

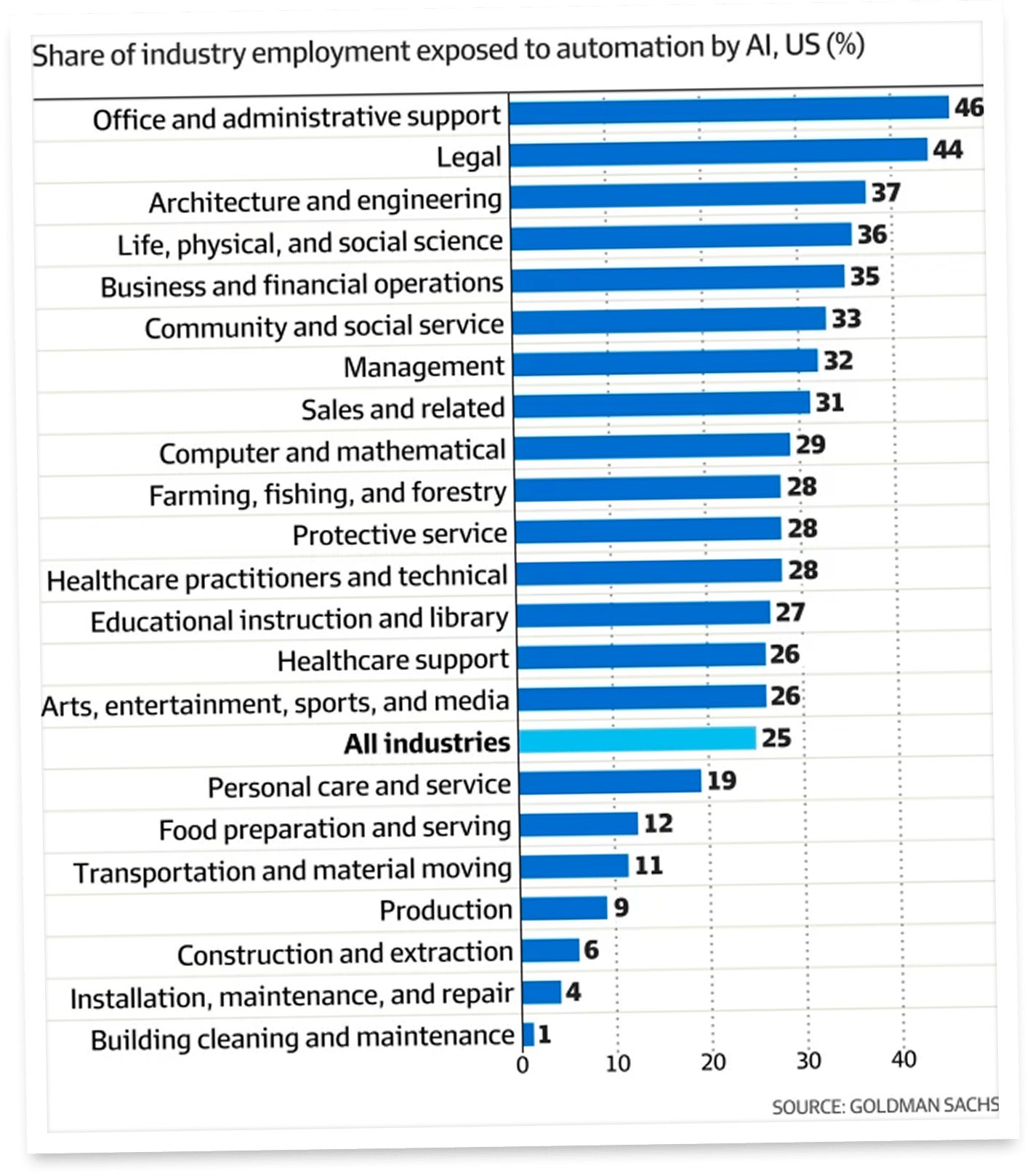

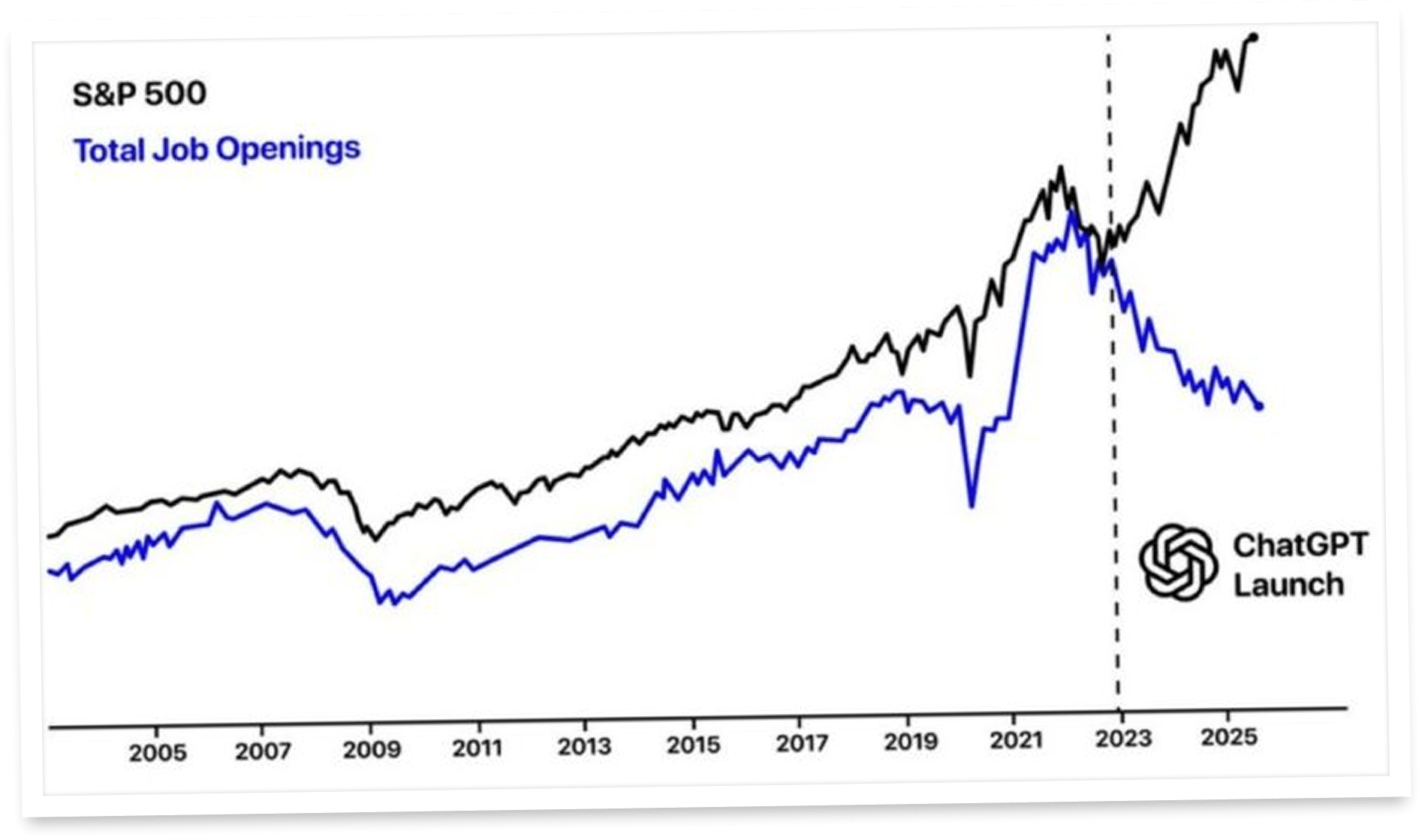

When the Fed chair starts talking about AI and unemployment in the same breath, you know the macro conversation has shifted. At his December press conference, Jerome Powell said AI is “probably part of the story” behind a softening U.S. labor market—but “not a big part…yet,” stressing that it’s still early days and the signal hasn’t cleanly shown up in unemployment-insurance claims. That’s the tension: companies keep announcing layoffs or slower hiring and name-checking AI, while the broad data stays oddly muted.

Powell also sketched the upside. He pointed to several years of stronger productivity and argued that investment tied to AI - especially data centers - has been supporting business spending. In other words, AI can be a growth engine even while it quietly rearranges who gets hired.

Then came the uncomfortable footnote: AI may raise output per hour while pushing some workers to “find other jobs,” and central banks don’t really have tools for the social labor-market fallout.

Overall, this is just the beginning, and so far nobody knows how things will develop. The lesson, though, is clear: we need a much more fundamental discussion about AI’s impact on work, because central banks alone don’t have the tools to handle it.

Sources:

🔗 https://www.theinformation.com/articles/job-market-worsening-ai-part-story-fed-chair-says?rc=bfliih

🔗 https://www.thestreet.com/fed/fed-chair-jerome-powell-credits-automation-and-ai-for-this-exciting-structural-boom-in-the-economy