Dear Readers,

Today is Wednesday, and there was a lot going on yesterday: Google I/O made a lasting impression.

A firework display of releases, countless products and improvements, a cascade of groundbreaking innovations! A huge victory compared to last year.

Find out below exactly what was shown.

In Today’s Issue:

Google I/O sets off AI fireworks with Gemini 2.5, Veo 3, and Imagen 4

NVIDIA reveals bold roadmap for physical AI, robotics, and national-scale AI factories

AI now diagnoses X-rays instantly—“no professional eyes needed”

Microsoft launches Agent Factory, NLWeb, and Copilot Tuning to shape the agentic web

And more AI goodness…

All the best,

Google I/O was a firework display of releases!

The TLDR

At Google I/O 2025, the tech giant unleashed a wave of AI innovations: Gemini 2.5 Pro introduces multi-threaded reasoning for complex problems, while its faster sibling, Gemini 2.5 Flash, offers rapid, cost-effective responses. Creatives get a boost from Veo 3’s video generation with ambient sound and dialogue, and Imagen 4 delivers typo-free, high-res images. All tools are live in AI Studio and Vertex AI. With Gemini integrating into Android and XR Glasses, Google positions itself as a front-runner in everyday AI.

What a thrilling evening! No sooner had the curtain fallen than Google set off AI fireworks at I/O 2025 that made developers' hearts beat faster. With Gemini 2.5 Pro, the community gets a deep-think mode for the first time, which explores several lines of reasoning in parallel and thus handles tricky math and coding problems with ease.

If you need speed, go for Gemini 2.5 Flash, its turbo brother, which delivers answers at lightning speed and at low cost thanks to efficiency tweaks.

Creatives will rejoice over Veo 3: the video model now not only generates photorealistic scenes but also, for the first time, mixes authentic ambient sound and dialogue into the clip, so a full storyboard comes together in one go.

At the same time, Imagen 4 takes the stage: it renders images in 2K resolution and reproduces text so cleanly that memes and posters can finally be created without embarrassing typos.

All of this is already available in AI Studio and Vertex AI, so anyone keen to experiment can get started today and present working prototypes next week. Google also showed how it wants to integrate Gemini deeply into its Android ecosystem. With the XR Glasses, Gemini will become our everyday visual companion, checking emails and calendars and proactively suggesting solutions.

Why it matters: Google has shown that DeepMind's research translates directly into useful tools that we can integrate into our everyday lives. Google has caught up and is now leading the AI race.

In The News

NVIDIA Unveils Bold Roadmap for Physical AI, Robotics & AI Factories

NVIDIA has just raised the bar for AI and robotics with a sweeping set of announcements. DeepSeek R1 now runs 4x faster on NVIDIA hardware, a major gain for AI inference and reasoning. In a leap toward general-purpose robotics, NVIDIA introduced Isaac GR00T N1.5, a foundation model that learns tasks from a single demo using simulated training via Omniverse. An open-source physics engine, co-developed with Disney and DeepMind, is set to launch in July, bringing GPU-accelerated, real-time training for robots and drones. Finally, the Grace Blackwell AI superchip delivers 40 PFLOPS per node and is already powering next-gen infrastructure at major AI firms.

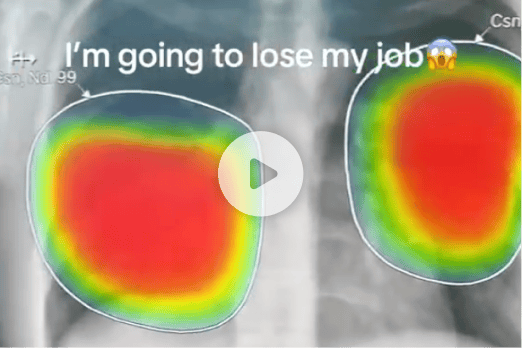

AI Revolutionizes X-Ray Diagnostics

AI can now accurately analyze X-rays and detect medical issues like fractures or infections in seconds, making expert-level diagnostics accessible anywhere. As one presenter put it, “You no longer need professional eyes to look at it.”

Microsoft Unveils Agent Factory, NLWeb, and Copilot Tuning for the Future of AI

Microsoft is pushing AI forward with Copilot Tuning to personalize company language, the Agent Factory platform for building advanced apps, and NLWeb, a new way to browse using natural language. With Microsoft Discovery, AI now helps scientists generate ideas and simulate outcomes—speeding up breakthroughs like eco-friendly coolants.

Graph of the Day

On the legal benchmark, Google takes first place and even outperforms OpenAI's o3.

BLIP3-o: A Family of Fully Open Unified Multimodal Models—Architecture, Training and Dataset

AI researchers present GRIT, a new training approach for language models. Instead of just optimizing text generation, GRIT simultaneously improves how the AI represents information internally, its “understanding”, so to speak. The novelty: the AI not only learns to solve tasks better, but also to grasp new concepts more quickly. This promises smarter, more adaptable AIs for a wide range of applications, from complex problem solving to more efficient knowledge processing.

Depth Anything with Any Prior

AI training revolutionized? Researchers present PISHF, a method in which an advanced AI simulates “human” feedback for other models. The trick: instead of relying on expensive human evaluations, the AI learns from these synthetic preferences, which drastically reduces cost and effort. This could accelerate the development of more powerful and more precise AIs and thus open up new technological possibilities.

Unlocking Non-Invasive Brain-to-Text

How can a good AI adaptation method (LoRA) be made even better? Researchers present “Richer LoRAs”. What's new: before specialization, small, versatile pre-trained “base layers” are injected into the AI. This makes the specialized AI not only better at its task, but also more flexible and more “world-savvy”, with minimal additional effort. This promises more powerful, more adaptable specialized AIs for complex challenges.
