Dear Readers,

Today we have something special for you: an exclusive interview with Kari Briski, Vice President of Generative AI Software for Enterprise at NVIDIA.

Our conversation focuses on NVIDIA’s Nemotron — a family of open-source foundation models (weights, datasets and training recipes published) offered in multiple sizes and variants for researchers and developers to download and run. Read on to discover why NVIDIA publishes its own models in addition to hardware, and how the company envisions the next phase of AI.

Our goal at Superintelligence — and mine as Editor-in-Chief in particular — is to make the artificial intelligence revolution accessible and understandable to everyone, especially to those outside the tech bubble. This interview was conducted in that very spirit: to explain, in plain language, what Nemotron is for and why NVIDIA develops its own software alongside its hardware.

It was a great honor for me to conduct this interview with Kari, an impressive personality.

(Kari Briski, Vice President of Generative AI Software for Enterprise at NVIDIA)

With this in mind, enjoy reading!

All the best,

Nemotron at NVIDIA: From Chips to Agentic AI

Exclusive: An interview with Kari Briski, Vice President of Generative AI Software for Enterprise at NVIDIA

Kim Isenberg: Hi, I’m Kim, editor-in-chief of Superintelligence, your source for AI insights. Today, I’m joined by Kari Briski, Vice President of Generative AI Software for Enterprise at NVIDIA, a leader driving the company’s full-stack AI strategy, from NeMo and NIM to Nemotron, its family of open-weight reasoning models and data.

Kari Briski: Thank you for having me.

Kim: Let’s start at the beginning and build from there. NVIDIA has long been synonymous with compute and infrastructure. What was the moment you decided not just to power AI, but to build it?

Kari: We’ve always been building a developer ecosystem. I’ve been at NVIDIA for a little over nine years, always in software. Hardware without software is just a brick. From CUDA onward—accelerated libraries across domains—we’ve focused on the stack that lets developers actually use the compute. I think of LLMs as libraries that get integrated into applications.

Kim: Do you still see NVIDIA primarily as a hardware company, or has the mission become broader?

Kari: I haven’t viewed us as “just hardware.” The world often does, but we don’t build chips in isolation—we build systems and data centers, and the software for end-to-end operation. We call it extreme co-design: understanding and optimizing across the whole stack so it runs at scale.

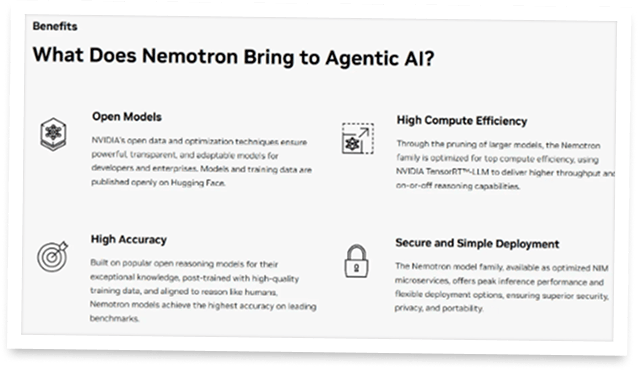

Kim: Some might say: we already have ChatGPT, Claude, Gemini, Llama—why Nemotron? What gap justified developing your own foundation models?

Kari: Nemotron didn’t happen overnight. We’d been building for years, initially for ourselves—to learn how to train efficiently and run inference at scale, because generative AI will live inside every application. Also, Nemotron isn’t “just a checkpoint.” When we say open-source, we mean: we release pre-training and post-training data (where possible), algorithms, the software to train and distill into smaller models, and the runtime to serve efficiently. It’s a developer platform, not a single file.

The Idea Behind Nemotron – Technology with a Purpose

Kim: You didn’t build a single model but a family—Nano, Super, Ultra—from laptops to data centers. Why design for multiple scales?

Kari: Real applications use many models of different sizes. Hardware varies: latency, memory, power, cost. We designed Nano to fit smaller GPUs you can get on a cloud service (e.g., ~24 GB class). Super fits a single H100. Ultra targets a single Hopper node (8 GPUs) for larger reasoning. Many frontier models need multiple nodes just to run; starting with a single node matters for practicality. We might go bigger—roadmaps evolve—but the principle stands.

Kim: Openness seems core to Nemotron’s philosophy. Is it mainly about trust and innovation, or something deeper about how AI ecosystems should evolve?

Kari: Both. It’s trust through transparency, and it’s how you attract developers. When we put datasets and tooling out, engagement actually went up—teams called us to ask why we chose certain data, how to generate more, what the roadmap is. Openness grows the ecosystem.

The Move Toward Agentic AI: The Next Evolution

Kim: NVIDIA talks about the era of agentic AI. In practice, how do agentic systems differ from today’s chatbots?

Kari: Traditional chatbots often look like RAG—retrieve, respond. Early “agents” were largely rules-based dialogue management. Reasoning changes that: agents plan, self-reflect, choose tools, write code or SQL, and act with a degree of autonomy. Retrieval becomes just one tool among many.
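
To make the contrast concrete, here is a minimal Python sketch of the idea that retrieval becomes one tool among several that an agent can choose from. Everything in it is a hypothetical illustration rather than NVIDIA's or Nemotron's implementation: the tool names `retrieve` and `run_sql`, the `plan` stub, and the sample data are assumptions. In a real agent, a reasoning model would produce the plan instead of the hard-coded rule used here.

```python
from typing import Callable, Dict, List, Tuple


def retrieve(query: str) -> str:
    """Hypothetical retrieval tool (in classic RAG this is the whole pipeline)."""
    docs = {"refund policy": "Refunds are accepted within 30 days."}
    return docs.get(query, "no document found")


def run_sql(statement: str) -> str:
    """Hypothetical database tool; a real one would execute the statement."""
    return f"executed: {statement}"


# Registry of available tools: retrieval is just one entry among several.
TOOLS: Dict[str, Callable[[str], str]] = {"retrieve": retrieve, "run_sql": run_sql}


def plan(task: str) -> List[Tuple[str, str]]:
    """Stub planner (assumption): a reasoning model would normally decide this."""
    if "how many" in task.lower():
        return [("run_sql", "SELECT COUNT(*) FROM orders")]
    return [("retrieve", "refund policy")]


def run_agent(task: str) -> List[str]:
    """Run each planned step by dispatching to the tool the planner chose."""
    observations = []
    for tool_name, argument in plan(task):
        observations.append(TOOLS[tool_name](argument))
    return observations
```

Calling `run_agent("How many orders did we get?")` routes the task to the SQL tool, while a policy question is routed to retrieval. The point of the sketch is only the dispatch structure: the planner picks a tool per step instead of always retrieving and responding.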

Kim: There’s growing talk of AI teammates. Do you expect agents to become true collaborators?

Kari: Yes, over time. Right now we’re still working out protocols (agent-to-agent, inter-org). Human-in-the-loop will remain for a while. Specialization is key—and verifiers matter. Coding agents took off because “did it compile/pass tests?” is a clear signal. Other domains need good verifiers too, even when answers are subjective.
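
The "did it compile/pass tests?" signal can be sketched in a few lines of Python. This is an illustrative verifier under stated assumptions, not a tool from the Nemotron release: the function name `verify_code`, the `add_test` check, and the sample candidates are all hypothetical. It uses only Python's built-in `compile` and `exec`.

```python
from typing import Callable, Dict


def verify_code(source: str, test: Callable[[Dict[str, object]], bool]) -> bool:
    """Return True only if the candidate compiles, runs, and passes the test."""
    try:
        compiled = compile(source, "<candidate>", "exec")  # did it compile?
    except SyntaxError:
        return False
    namespace: Dict[str, object] = {}
    try:
        # NOTE: exec runs arbitrary code; untrusted candidates need a sandbox.
        exec(compiled, namespace)
        return bool(test(namespace))  # did it pass the test?
    except Exception:
        return False


def add_test(namespace: Dict[str, object]) -> bool:
    """Hypothetical unit test: the candidate must define a correct add()."""
    return namespace["add"](2, 3) == 5


good = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"   # compiles, fails the test
broken = "def add(a, b)\n    return a + b\n"   # missing colon, does not compile
```

Here `verify_code(good, add_test)` succeeds, while the buggy and broken candidates are both rejected. The result is a clear pass/fail signal, which is the property that domains with subjective answers find harder to obtain.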

Kim: With agents acting on our behalf, how do we ensure safety, control, transparency, and alignment?

Kari: It’s about the environment—the tasks and feedback you expose models to. Alongside Nemotron, we release reinforcement learning environments and training recipes. Robustness comes from both scale and experience across varied situations; hence the need for multiple model sizes and training regimes.

From Chips to Intelligence – NVIDIA’s Strategic Arc

Kim: Place Nemotron inside NVIDIA’s broader stack: are we entering a chapter where NVIDIA not only powers AI but provides ready-to-use intelligence via models and microservices? What changes for enterprises?

Kari: Think of Nemotron as an SDK for specialized AI. Some libraries are usable out-of-the-box; others need adapting. Enterprises will run many models—specialized, different sizes, guardrail models—and integrate them deeply. This is a new way to write software: new tools, skill sets, and verification pipelines.

The Future of AI – Vision and Human Meaning

Kim: Five years out—how do you imagine agents in daily life and the economy, and where does Nemotron fit?

Kari: We’ll see agents infuse more domains—healthcare, automotive, retail—and more modalities. The “last mile” is enterprises specializing and integrating agents into production software. Nemotron’s role is to be the development platform that lets them do that reliably.

Kim: On a personal note: what does it feel like to work at the frontier?

Kari: Like Christmas morning every day—you’re unwrapping something new and building fast. It’s exciting.

Kim: If you had to describe Nemotron in one sentence?

Kari: Nemotron’s mission is to be the generative AI development platform for specialized AI.

Kim: What do you most want people to understand about NVIDIA’s role in shaping the next era of AI?

Kari: We’re the AI company that works with every AI company. Just as we built a thriving CUDA ecosystem, we’re now building the developer ecosystem for generative AI and future applications.

How You Can Get Started with Nemotron Today

Kim: We’ve heard a lot of exciting things about Nemotron. If someone is curious and wants to try it themselves, what’s the best way to begin?

Kari: It depends on your experience level, but the easiest path is hands-on via build.nvidia.com, where we host many models and reasoning UIs so you can see chain-of-thought transparency in action. Because we release openly, you can also go to Hugging Face to download checkpoints and datasets, including synthetic “persona” datasets we’ve generated for training. And there are YouTube tutorials like “how to train a reasoning model in ~48 hours.” Three easy starting points: build.nvidia.com, Hugging Face, and YouTube tutorials.

Kim: You also mentioned smaller models—options for every size and need?

Kari: Yes. We’ve used our Nemotron techniques to distill smaller models even further—down to around 4-billion-parameter scale—so developers can pick the size that fits their hardware and use case.
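
For readers wondering what "fits their hardware" means in practice, a rough back-of-the-envelope check can be written as a short Python sketch. The assumptions are ours, not NVIDIA's: weights stored at 2 bytes per parameter (16-bit), a hypothetical 80% headroom factor, and weights only, ignoring activations and the key-value cache, which add to real memory use. The function names are illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for model weights only, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, gpu_memory_gb: float,
         bytes_per_param: float = 2.0, headroom: float = 0.8) -> bool:
    """Check whether the weights fit within a fraction of GPU memory.

    headroom is an assumed safety margin for everything besides the weights.
    """
    return weight_memory_gb(num_params, bytes_per_param) <= gpu_memory_gb * headroom


# A model at roughly 4 billion parameters in 16-bit precision:
small_model_gb = weight_memory_gb(4e9)   # 8.0 GB of weights
fits_24gb = fits(4e9, 24.0)              # compared against a ~24 GB class GPU
```

Under these assumptions, a model of around 4 billion parameters needs about 8 GB for its weights and fits comfortably on a ~24 GB class GPU, while a hypothetical 70-billion-parameter model at the same precision would not.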

Kim: Fantastic—people excited about AI can just download and try the open models. Amazing. It was a pleasure talking with you.

Kari: Thank you.

If you want to delve deeper into Nemotron, you can find everything you need to know at this link: