Dear Readers,

Sometimes the AI world hits one of those rare moments when the rules of the game shift radically, and today is one of those days. Anthropic has expanded Claude Sonnet 4's context window to one million tokens, which means that entire codebases, complex research series, or extensive company documents can be analyzed in a single request. This is not just a technical adjustment, but a paradigm shift: from small-scale interactions to holistic, project-wide thinking.

In this issue, we dive into the most exciting developments of the week: from the new open-source ARC model GLM-4.5 to advances in the ReasonRank system that teaches search engines to think logically, to the latest insights into chain-of-thought analysis. We also take a look at the growing competition between the major models—and what that means for you as a developer, researcher, or simply a curious observer. Let's get started.

In Today’s Issue:

Anthropic's Claude just got a massive memory upgrade to 1 million tokens.

Is AI's "chain of thought" a reliable window into its mind?

A new open-source model from China is here to power the next wave of AI agents.

This new AI is designed to make search engines much smarter at answering complex questions.

And more AI goodness…

All the best,

Claude Sonnet 4 now supports 1M tokens of context

The TLDR

👉 Paradigm shift in AI coding: With 1M tokens, developers can now hand Claude complete projects instead of code fragments, which should markedly improve the quality of its analyses and suggestions.

👉 Strategic competitive position: Anthropic is now on par with OpenAI and Google, increasing pressure on all providers to develop even larger context windows and better pricing models.

👉 Cost optimization becomes critical: The doubling of prices for large prompts makes intelligent prompt caching and strategic context management decisive factors for success.

👉 New application classes are emerging: Thanks to the expanded capacity, autonomous software engineering agents and comprehensive document analysis tools are moving from niche solutions to mainstream applications.

The limits of what is possible in AI development have just been redefined: Claude Sonnet 4 now supports up to 1 million tokens in context on the Anthropic API – a 5-fold increase over the previous limit of 200,000 tokens. What does this mean in concrete terms? Developers can now process entire codebases with over 75,000 lines of code or dozens of research papers in a single request!
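For a rough feel of whether your own project fits, here is a minimal sketch. It assumes a crude heuristic of about four characters per token, which is not a real tokenizer, and the function names and the `reserve` budget for the model's reply are hypothetical choices made for illustration.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. chars_per_token is an assumed heuristic, not a tokenizer."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(
    sources: dict[str, str],
    limit: int = 1_000_000,   # the 1M-token window described above
    reserve: int = 50_000,    # hypothetical budget kept free for instructions and the reply
) -> tuple[bool, int]:
    """Return (fits, estimated_total_tokens) for a mapping of file name to file text."""
    total = sum(estimate_tokens(body) for body in sources.values())
    return total + reserve <= limit, total


if __name__ == "__main__":
    project = {
        "main.py": "print('hello')\n" * 2_000,
        "README.md": "Some documentation.\n" * 500,
    }
    ok, total = fits_in_context(project)
    print(f"estimated tokens: {total}, fits: {ok}")
```

Actual counts depend on the model's tokenizer and on the kind of content, so treat the result as a ballpark figure only.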

This innovation catapults the practical applications for AI-assisted development into entirely new dimensions. Imagine: instead of laboriously cherry-picking code snippets, Claude can now understand your entire project – from architecture and dependencies to documentation. This enables complete codebase analysis, processing of long documents, and persistent chats.

This marks a turning point for the AI community: Both Gemini and OpenAI have million-token models, so it's good to see Anthropic catching up. The pricing structure has been adjusted accordingly – for prompts over 200K tokens, the price effectively doubles, but prompt caching can be used to optimize costs and latency.
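To see what the long-prompt surcharge and caching could mean for a bill, here is a small back-of-the-envelope sketch. The base rate, the cache discount, and the rule that the whole prompt is billed at the higher rate once it crosses 200K tokens are illustrative assumptions, not official pricing; only the 200K threshold and the "effectively doubles" multiplier come from the text above.

```python
def prompt_cost(
    input_tokens: int,
    cached_tokens: int = 0,
    base_rate_per_mtok: float = 3.0,  # illustrative placeholder, not an official price
    threshold: int = 200_000,         # prompts above this size are billed at the higher rate
    long_multiplier: float = 2.0,     # "effectively doubles", per the text above
    cache_discount: float = 0.1,      # assumed fraction of the rate paid for cached tokens
) -> float:
    """Estimate the input cost of one request in currency units."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    rate = base_rate_per_mtok
    if input_tokens > threshold:
        # Assumption: the higher rate applies to the entire prompt.
        rate *= long_multiplier
    fresh_tokens = input_tokens - cached_tokens
    billable = fresh_tokens * rate + cached_tokens * rate * cache_discount
    return billable / 1_000_000


if __name__ == "__main__":
    print(prompt_cost(100_000))                         # short prompt, base rate
    print(prompt_cost(500_000))                         # long prompt, doubled rate
    print(prompt_cost(500_000, cached_tokens=400_000))  # long prompt, mostly cached
```

Under these assumed numbers, caching most of a long prompt brings its cost back toward that of a much shorter one, which is why context management matters once you cross the threshold.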

Things are getting particularly exciting with context-aware agents: these can now manage hundreds of tool calls and multi-step workflows without losing track. This considerably expands the possibilities for autonomous software development, which companies such as iGent AI are already exploring with their Maestro system.

How will this development change the way we work with AI? What new workflow paradigms will emerge as a result?

Why it matters: This dramatic expansion of the context window fundamentally changes how developers can work with AI tools—from fragmented interactions to holistic, project-wide analysis. Anthropic's move could encourage Google Gemini users to give Claude another chance, further intensifying competition in the AI market.

In The News

NVIDIA Releases Massive Vision Dataset

NVIDIA has released a new open-source dataset with 3 million high-quality samples to help researchers train vision language models for tasks like OCR, visual question answering, and captioning.

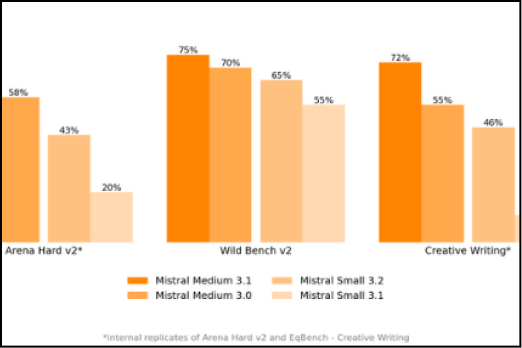

Mistral Upgrades Medium Model

Mistral AI has launched Mistral Medium 3.1, a new model with an overall performance boost, improved tone, and smarter web searches, now available as the default in Le Chat and via their API.

Skywork Releases Open-Source World Model

Just a week after DeepMind's closed-source Genie 3, Skywork has released Matrix-Game 2.0, the first open-source, real-time interactive world model capable of minutes-long interaction at 25 FPS.

Graph of the Day

GPT-5 is now listed as GPT-5-high on LMArena, a version that is not even accessible in ChatGPT.

CoT May Be Highly Informative Despite “Unfaithfulness”

The METR team examines how informative chain-of-thought (CoT) traces are despite possible “unfaithfulness.” Replicated and extended experiments (free-response answers, more complex hints) show that when a task requires CoT, the CoT almost always reflects the decisive reasoning; in addition, a simple detector with a ≈99% hit rate can recognize whether a hint was used. Relevance: CoT analyses are useful as safety monitoring for risky planning and deception, which matters for governance, audits, and product approvals for future AI.

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

Researchers at Zhipu AI and Tsinghua University have unveiled GLM-4.5, a powerful new open-source language model. What makes it special is that it was developed specifically for demanding tasks in the areas of autonomous action (agentic), logical reasoning, and programming (coding). Thanks to a “mixture-of-experts” architecture, the model works very efficiently and can compete with leading proprietary models. This opens up new possibilities for smarter, autonomous AI systems and makes cutting-edge research accessible to a broader community of developers.

ReasonRank: Empowering Passage Ranking with Strong Reasoning Ability

Researchers have developed ReasonRank, an AI that drastically improves search results for complex queries where simple keyword matching fails. The key feature is a system that automatically generates high-quality training data for logical thinking – a previous bottleneck in AI research. Through innovative, two-stage training, the model learns to deeply understand and justify connections. This enables more precise answers to challenging questions in science and technology and makes search engines smarter research assistants.

Get Your AI Research Seen by 200,000+ People

Have groundbreaking AI research? We’re inviting researchers to submit their work to be featured in Superintelligence, the leading AI newsletter with 200k+ readers. If you’ve published a relevant paper on arXiv.org, email the link to [email protected] with the subject line “Research Submission”. If selected, we will contact you for a potential feature.

Question of the Day

Which model do you prefer: GPT-5 or Claude 4.1?

Rumours, Leaks, and Dustups

Elon Musk and Sam Altman are currently embroiled in a fierce mudslinging match, accusing each other of manipulation.

Will DeepSeek R2 be revealed in the next two weeks? The first leaks are starting to surface.